In the previous section, we used an analogy with words to build our first neural network for inferring node embeddings (the DeepWalk algorithm). Surprisingly enough, the analogy also works in the other direction: we can obtain information about a text by turning it into a graph and applying GNNs to it.

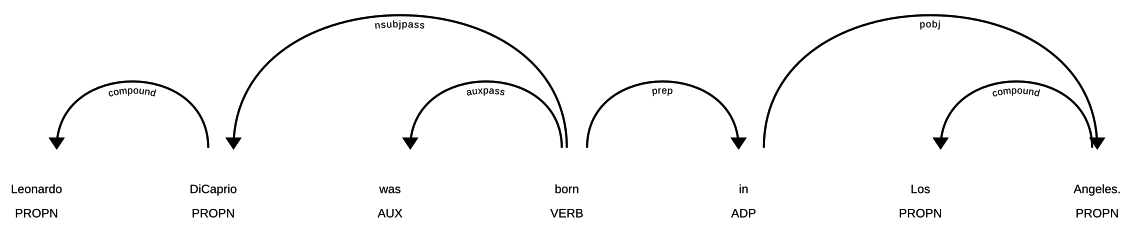

To understand why graphs are important in NLP, look at the following diagram, which is repeated from Chapter 3, Empowering Your Business with Pure Cypher:

The preceding diagram shows the relationships between words in a sentence, and these relationships are clearly not linear: they do not simply follow the left-to-right order of the words.
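
To make this concrete, here is a minimal sketch in plain Python of how a sentence might be represented as a graph. The sentence, the edge list, and the relation labels (`nsubj`, `obj`, `amod`) are hand-written, hypothetical examples in the style of dependency links; they are not taken from the diagram or produced by a parser.

```python
# Hypothetical example sentence with hand-written, dependency-style edges.
# Each edge is (head index, dependent index, label); no parser is involved.
tokens = ["She", "reads", "long", "books"]
edges = [
    (1, 0, "nsubj"),  # reads -> She
    (1, 3, "obj"),    # reads -> books
    (3, 2, "amod"),   # books -> long
]

# Build an adjacency list keyed by the head word.
graph = {token: [] for token in tokens}
for head, dep, label in edges:
    graph[tokens[head]].append((tokens[dep], label))

# Collect edges that link words that are not neighbors in the sentence.
non_adjacent = [(tokens[h], tokens[d]) for h, d, _ in edges if abs(h - d) > 1]

print(graph)
print(non_adjacent)
```

In this toy example, the link between "reads" and "books" skips over "long", so the structure cannot be captured by a simple left-to-right chain of words.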