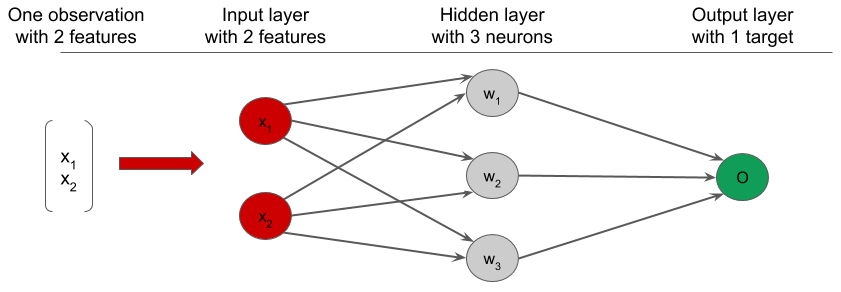

To understand how neural networks work, let's consider a simple binary classification problem with two input features, x1 and x2, and a single output class O, whose value is either 0 or 1.

A simple neural network that can solve this problem is illustrated in the diagram above.

The neural network has a single hidden layer comprising three neurons (the middle layer) and an output layer (the right-most layer). Each neuron of the hidden layer has a weight vector, wi, whose size equals the size of the input layer. These weights are the model parameters that we will try to optimize so that the network's output is as close as possible to the true class.
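
To make the shapes concrete, here is a minimal sketch of how the hidden layer's weights could be laid out, assuming plain Python lists. The names `N_INPUTS`, `N_HIDDEN`, and `hidden_weights` are illustrative and do not come from the text, and the numeric values are arbitrary placeholders rather than trained weights.

```python
# Illustrative sketch: weight layout for the hidden layer described above.
N_INPUTS = 2   # input features x1 and x2
N_HIDDEN = 3   # neurons in the hidden layer

# One weight vector per hidden neuron, each as long as the input layer.
# The values are arbitrary placeholders, not trained weights.
hidden_weights = [
    [0.1, -0.2],   # w1, weights of the first hidden neuron
    [0.4, 0.3],    # w2, weights of the second hidden neuron
    [-0.5, 0.6],   # w3, weights of the third hidden neuron
]

# Total number of hidden-layer weights to optimize: 3 neurons x 2 inputs.
n_weights = sum(len(w) for w in hidden_weights)
print(n_weights)  # 6
```

With two inputs and three hidden neurons, the hidden layer alone contributes six weights to the set of parameters being optimized.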

When an observation is fed into the network through the input layer, the following happens:

- Each neuron of the hidden layer receives all the input features.

- Each neuron of the hidden layer computes the weighted sum of these features, using its weight vector and bias. As an example, the output of the first...
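
The weighted-sum step in the list above can be sketched as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: the function names `weighted_sum` and `hidden_layer_sums`, the observation `x`, and all weight and bias values are hypothetical. The sketch covers only the weighted sum and bias, and leaves out any further processing a neuron may apply to that sum.

```python
# Illustrative sketch: each hidden neuron computes a weighted sum of all inputs.

def weighted_sum(features, weights, bias):
    """Return the sum of weight * feature over all features, plus the bias."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def hidden_layer_sums(features, hidden_weights, hidden_biases):
    """Return one weighted sum per hidden neuron; every neuron sees all features."""
    return [
        weighted_sum(features, w, b)
        for w, b in zip(hidden_weights, hidden_biases)
    ]


# Hypothetical observation with two features (x1, x2).
x = [1.0, 2.0]

# Hypothetical parameters: one weight vector and one bias per hidden neuron.
hidden_weights = [[0.5, -1.0], [1.0, 1.0], [0.0, 2.0]]
hidden_biases = [0.5, -1.0, 0.0]

sums = hidden_layer_sums(x, hidden_weights, hidden_biases)
print(sums)  # [-1.0, 2.0, 4.0]
```

For the first neuron, for instance, the sum is 0.5 × 1.0 + (−1.0) × 2.0 + 0.5 = −1.0.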