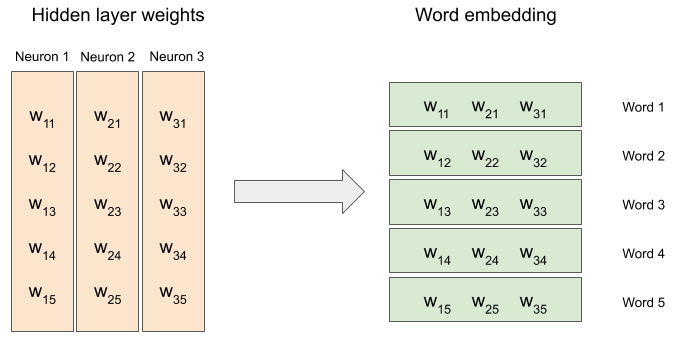

The skip-gram neural network architecture contains a single hidden layer. The number of neurons in that layer equals the dimension of the embedding space, that is, the number d of features that remain after dimensionality reduction. The following diagram illustrates how we go from the neuron weights in the hidden layer (one vector of length N for each of the d neurons, shown as the vertical vectors) to the word embeddings: N vectors of length d, one per word in the vocabulary of N words (the horizontal vectors on the right):
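
The relationship in the diagram is a matrix transpose. Here is a minimal sketch in plain Python, assuming a toy vocabulary of N = 5 words and d = 3 hidden neurons with arbitrary placeholder weights (the sizes and the names `neuron_weights`, `embeddings`, and `hidden_output` are hypothetical). Transposing the d weight vectors of length N yields N embeddings of length d. Feeding a one-hot input through the hidden layer selects exactly one of those rows.

```python
# Hypothetical sizes: N = vocabulary size, d = number of hidden neurons.
N, d = 5, 3

# Hidden-layer weights as the diagram describes them: one vector of
# length N per neuron, i.e. d vectors of length N (a d x N matrix).
# Values are arbitrary placeholders, not trained weights.
neuron_weights = [
    [0.1 * (j + 1) + i for j in range(N)]  # neuron i's weight for word j
    for i in range(d)
]

def transpose(matrix):
    """Turn d vectors of length N into N vectors of length d."""
    return [list(column) for column in zip(*matrix)]

# Row w of the transposed matrix is the d-dimensional embedding of word w.
embeddings = transpose(neuron_weights)

def hidden_output(word_index):
    """Hidden layer applied to a one-hot input: each neuron takes the dot
    product of its N weights with the one-hot vector."""
    one_hot = [1.0 if j == word_index else 0.0 for j in range(N)]
    return [sum(w * x for w, x in zip(weights, one_hot)) for weights in neuron_weights]

# The hidden layer's output for word w is exactly that word's embedding row.
for w in range(N):
    assert hidden_output(w) == embeddings[w]

print(len(embeddings), len(embeddings[0]))  # prints "5 3"
```

This is why trained skip-gram implementations can skip the multiplication entirely and treat the hidden layer as a lookup table indexed by word.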

Since the skip-gram network has a single hidden layer, the next step in the calculation flow is the output layer.
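
To show how the hidden layer's output feeds the output layer, here is a minimal forward-pass sketch. It assumes the plain full-softmax output layer of the original skip-gram formulation, with one output weight vector of length d per vocabulary word. Practical word2vec implementations often replace this with negative sampling or hierarchical softmax. The toy sizes, the placeholder weights, and the names `output_weights` and `forward` are hypothetical.

```python
import math

# Hypothetical toy sizes and placeholder weights (not trained values).
N, d = 4, 2  # vocabulary size, embedding dimension

# Hidden layer: N x d embedding matrix (one row per word).
embeddings = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.8], [0.2, 0.2]]
# Output layer (assumed full softmax): one weight vector of length d per word.
output_weights = [[0.3, 0.1], [-0.2, 0.4], [0.6, -0.5], [0.0, 0.2]]

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def forward(center_word):
    """Probability of each vocabulary word appearing in the context of center_word."""
    # The hidden layer is linear: for a one-hot input it simply returns
    # the center word's embedding row.
    h = embeddings[center_word]
    # Output layer: one dot-product score per vocabulary word, then softmax.
    scores = [sum(a * b for a, b in zip(h, u)) for u in output_weights]
    return softmax(scores)

probs = forward(2)
print([round(p, 3) for p in probs])
```

The output is a probability distribution over all N words. This is also why a full softmax becomes expensive for large vocabularies: every forward pass scores every word.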