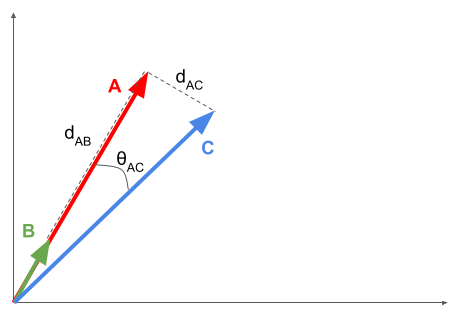

Cosine similarity is well known, especially within the NLP community, where it is widely used to measure the similarity between two texts. Euclidean distance measures how far apart two points are. Cosine similarity instead looks at the angle between two vectors and is defined as the cosine of that angle. Consider the following scenario:

The Euclidean distance between vectors A and C is $d_{AC}$, while $\theta_{AC}$ is the angle between them, so their cosine similarity is $\cos(\theta_{AC})$.

Similarly, the Euclidean distance between A and B is represented by the $d_{AB}$ line, but the angle between them is 0. Since $\cos(0) = 1$, the cosine similarity between A and B is 1. That is higher than the cosine similarity between A and C, because $\theta_{AC} > 0$ and therefore $\cos(\theta_{AC}) < 1$.
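Formally, take two vectors $A$ and $B$ with components $A_i$ and $B_i$. Their cosine similarity is the dot product divided by the product of their Euclidean norms. For comparison, the Euclidean distance between the same two points is shown on the second line:

$$
\cos(\theta_{AB}) = \frac{A \cdot B}{\lVert A \rVert \, \lVert B \rVert} = \frac{\sum_i A_i B_i}{\sqrt{\sum_i A_i^2}\,\sqrt{\sum_i B_i^2}}
$$

$$
d_{AB} = \lVert A - B \rVert = \sqrt{\sum_i (A_i - B_i)^2}
$$

Cosine similarity ranges from -1 to 1. When all components are non-negative, it lies between 0 and 1. Scaling either vector by a positive constant leaves it unchanged, but the same scaling changes the Euclidean distance.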

To put this example in the context of GitHub contributors, consider the following:

- A contributes to two repositories R1 and R2, with 5 contributions to R1 (x axis) and 10 contributions to R2 (y axis).

- B's contributions to the same repositories are: 1 contribution to R1 and 2 contributions to R2,...
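The following minimal sketch in plain Python computes both measures for the contribution counts of A and B listed above. The function names `cosine_similarity` and `euclidean_distance` are illustrative and not taken from any library. Each contributor is represented as a tuple of counts `(R1, R2)`. B's counts are exactly one fifth of A's, so the two vectors point in the same direction. Their Euclidean distance is therefore non-zero, while their cosine similarity is 1.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between vectors u and v: (u . v) / (|u| * |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        # The angle (and thus the cosine) is undefined for a zero vector.
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def euclidean_distance(u, v):
    """Straight-line distance between the points u and v."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


# Contribution counts per repository, as (R1, R2).
A = (5, 10)
B = (1, 2)

print(f"Euclidean distance: {euclidean_distance(A, B):.2f}")  # prints 8.94
print(f"Cosine similarity:  {cosine_similarity(A, B):.2f}")   # prints 1.00
```

The Euclidean distance reflects the gap in the number of contributions (about 8.94 here). The cosine similarity only reflects how the contributions are distributed across R1 and R2, and that distribution is identical for A and B.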