Long short-term memory network

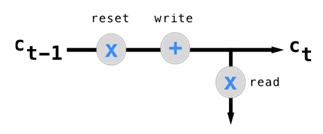

So far, we have seen that RNNs perform poorly on long sequences because of the vanishing and exploding gradient problems. LSTMs are designed to help us overcome this limitation. The core idea behind LSTM is gating logic, which provides a memory-based architecture that leads to an additive gradient effect instead of a multiplicative one, as shown in Figure LSTM: Core idea. To illustrate this concept in more detail, let us look at LSTM's memory architecture. Like any other memory-based system, a typical LSTM cell supports three major operations:

- Write to memory

- Read from memory

- Reset memory
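The three operations above can be sketched as a single step of a simplified, scalar memory cell. This is a minimal illustration under stated assumptions, not a full LSTM: the function name `memory_cell_step` is hypothetical, the gate values are passed in directly as numbers in [0, 1] (a real LSTM computes them with sigmoid layers from the input and previous hidden state), and "reset", "write", and "read" correspond to what are usually called the forget, input, and output gates.

```python
import math


def memory_cell_step(c_prev: float, candidate: float,
                     reset_gate: float, write_gate: float, read_gate: float):
    """One step of a simplified, scalar LSTM-style memory cell (hypothetical helper).

    All three gates are assumed to already lie in [0, 1]:
    - reset: scales the old memory (the LSTM "forget" gate)
    - write: adds a gated new candidate value (the LSTM "input" gate)
    - read:  exposes a gated view of the memory (the LSTM "output" gate)
    """
    # Reset + write: the new memory is an additive update of the scaled old memory.
    c = reset_gate * c_prev + write_gate * candidate
    # Read: the cell output is a gated, tanh-squashed copy of the memory.
    h = read_gate * math.tanh(c)
    return c, h


# Keep the old memory untouched (reset = 1, write = 0) and read it fully.
c, h = memory_cell_step(1.0, 0.5, reset_gate=1.0, write_gate=0.0, read_gate=1.0)
print(c, h)  # c stays 1.0, h is tanh(1.0)

# Wipe the old memory (reset = 0), store the candidate, but do not expose it.
c, h = memory_cell_step(1.0, 0.5, reset_gate=0.0, write_gate=1.0, read_gate=0.0)
print(c, h)  # c becomes 0.5, h is 0.0
```

Because the memory is updated as a sum rather than pushed through another weight matrix and nonlinearity, the derivative of `c` with respect to `c_prev` along this path is simply `reset_gate`.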

LSTM: Core idea (Source: https://ayearofai.com/rohan-lenny-3-recurrent-neural-networks-10300100899b)

Figure LSTM: Core idea illustrates this. First, the state value of the previous LSTM cell is passed through a reset gate, which multiplies that value by a scalar between 0 and 1. If the scalar is close to 1, it leads to the passing...
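The effect of the reset gate's scalar can be illustrated by carrying a stored value through several steps in which only the reset gate acts. The sketch below is a hypothetical helper, `reset_only`, that assumes nothing new is written and tracks only the direct cell-state path (in a full LSTM there are also indirect paths through the hidden state, which are ignored here). Along that path, each step's local derivative is just the reset-gate value, so the gradient is the product of those values.

```python
import math
from typing import Sequence, Tuple


def reset_only(c0: float, reset_gates: Sequence[float]) -> Tuple[float, float]:
    """Apply a sequence of reset-gate scalars to an initial memory value c0.

    Returns (c_T, d c_T / d c_0) along the direct cell-state path, assuming
    no new value is written at any step (hypothetical simplification).
    """
    c, grad = c0, 1.0
    for f in reset_gates:
        c = f * c     # reset: scale the stored value by a scalar in [0, 1]
        grad *= f     # local derivative d c_t / d c_{t-1} is just f
    return c, grad


# Gates near 1 let both the memory and its gradient pass through many steps.
_, g_keep = reset_only(1.0, [0.99] * 50)
# Gates well below 1 shrink the gradient geometrically.
_, g_fade = reset_only(1.0, [0.5] * 50)
print(g_keep, g_fade)
print(math.isclose(g_keep, 0.99 ** 50))
```

With a reset gate of 0.99 held for 50 steps, about 60% of the gradient signal survives, whereas a gate of 0.5 shrinks it to roughly 1e-15, which mirrors the vanishing behaviour of a plain RNN.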