Chapter 8: Object Recognition to Guide a Robot Using CNNs

Activity 8: Multiple Object Detection and Recognition in Video

Solution

- Mount the drive:

from google.colab import drive

drive.mount('/content/drive')

cd /content/drive/My Drive/C13550/Lesson08/

- Install the libraries:

!pip3 install https://github.com/OlafenwaMoses/ImageAI/releases/download/2.0.2/imageai-2.0.2-py3-none-any.whl

- Import the libraries:

from imageai.Detection import VideoObjectDetection

from matplotlib import pyplot as plt

- Declare the model:

video_detector = VideoObjectDetection()

video_detector.setModelTypeAsYOLOv3()

video_detector.setModelPath("Models/yolo.h5")

video_detector.loadModel()
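The loadModel() call cannot succeed if the weights file is not where setModelPath() points, so a quick path check can save time. The following is a minimal sketch using only the standard library; missing_files is a hypothetical helper introduced here, and the path is the one used in this activity, relative to the current working directory.

```python
from pathlib import Path


def missing_files(paths):
    """Return the subset of paths that do not exist as regular files."""
    return [p for p in paths if not Path(p).is_file()]


# Path taken from the activity; it is resolved against the current directory.
required = ["Models/yolo.h5"]

missing = missing_files(required)
if missing:
    print("Missing files:", missing)
else:
    print("All required files found.")
```

If the file is reported as missing, check that the cd step above pointed at the Lesson08 folder.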

- Declare the callback method:

# Map each class name to a named color that Matplotlib recognizes
color_index = {
    'bus': 'red', 'handbag': 'steelblue', 'giraffe': 'orange', 'spoon': 'gray',
    'cup': 'yellow', 'chair': 'green', 'elephant': 'pink', 'truck': 'indigo',
    'motorcycle': 'azure', 'refrigerator': 'gold', 'keyboard': 'violet', 'cow': 'magenta',
    'mouse': 'crimson', 'sports ball': 'mediumvioletred', 'horse': 'maroon', 'cat': 'orchid',
    'boat': 'slateblue', 'hot dog': 'navy', 'apple': 'darkblue', 'parking meter': 'aliceblue',
    'sandwich': 'skyblue', 'skis': 'deepskyblue', 'microwave': 'darkcyan', 'knife': 'cadetblue',
    'baseball bat': 'cyan', 'oven': 'lightcyan', 'carrot': 'dimgray', 'scissors': 'seagreen',
    'sheep': 'darkgreen', 'toothbrush': 'mediumseagreen', 'fire hydrant': 'limegreen', 'remote': 'forestgreen',
    'bicycle': 'olivedrab', 'toilet': 'ivory', 'tv': 'khaki', 'skateboard': 'palegoldenrod',
    'train': 'cornsilk', 'zebra': 'wheat', 'tie': 'burlywood', 'orange': 'lightsalmon',
    'bird': 'bisque', 'dining table': 'chocolate', 'hair drier': 'sandybrown', 'cell phone': 'sienna',
    'sink': 'coral', 'bench': 'salmon', 'bottle': 'brown', 'car': 'silver',
    'bowl': 'maroon', 'tennis racket': 'palevioletred', 'airplane': 'lavenderblush', 'pizza': 'hotpink',
    'umbrella': 'deeppink', 'bear': 'plum', 'fork': 'purple', 'laptop': 'indigo',
    'vase': 'mediumpurple', 'baseball glove': 'slateblue', 'traffic light': 'mediumblue', 'bed': 'navy',
    'broccoli': 'royalblue', 'backpack': 'slategray', 'snowboard': 'skyblue', 'kite': 'cadetblue',
    'teddy bear': 'darkcyan', 'clock': 'lightcyan', 'wine glass': 'teal', 'frisbee': 'aquamarine',
    'donut': 'mintcream', 'suitcase': 'seagreen', 'dog': 'springgreen', 'banana': 'mediumspringgreen',
    'person': 'honeydew', 'surfboard': 'palegreen', 'cake': 'olive', 'book': 'lawngreen',
    'potted plant': 'greenyellow', 'toaster': 'ivory', 'stop sign': 'beige', 'couch': 'khaki',
}

def forFrame(frame_number, output_array, output_count, returned_frame):
    # Clear the previous frame's plots before drawing the new ones
    plt.clf()

    this_colors = []
    labels = []
    sizes = []

    # Build one pie slice per detected class: label, count, and color
    for eachItem in output_count:
        labels.append(eachItem + " = " + str(output_count[eachItem]))
        sizes.append(output_count[eachItem])
        this_colors.append(color_index[eachItem])

    # Left panel: the frame with the detections drawn on it
    plt.subplot(1, 2, 1)
    plt.title("Frame : " + str(frame_number))
    plt.axis("off")
    plt.imshow(returned_frame, interpolation="none")

    # Right panel: pie chart of the object counts in this frame
    plt.subplot(1, 2, 2)
    plt.title("Analysis: " + str(frame_number))
    plt.pie(sizes, labels=labels, colors=this_colors, shadow=True, startangle=140, autopct="%1.1f%%")

    # Pause briefly so that the figure refreshes
    plt.pause(0.01)
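The bookkeeping inside forFrame can be tried out without a video or a plot. The following is a minimal sketch, assuming output_count is a dictionary that maps class names to counts, as it is used in the callback. The names summarize_counts and fallback_color are hypothetical, the fallback for classes missing from the color table is an addition that the callback itself does not have, and the sample counts are made up.

```python
def summarize_counts(output_count, color_index, fallback_color="gray"):
    """Build pie-chart labels, sizes, and colors from per-class counts."""
    labels, sizes, colors = [], [], []
    for name, count in output_count.items():
        labels.append(name + " = " + str(count))
        sizes.append(count)
        # Fall back to a neutral color if a class has no entry in color_index.
        colors.append(color_index.get(name, fallback_color))
    return labels, sizes, colors


# Made-up counts for a single frame, for illustration only.
sample_count = {"person": 3, "car": 2, "drone": 1}
sample_colors = {"person": "honeydew", "car": "silver"}

labels, sizes, colors = summarize_counts(sample_count, sample_colors)
print(labels)
print(sizes)
print(colors)
```

Keeping this logic in a plain function makes it easy to check the labels and colors before they are passed to plt.pie().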

- Run Matplotlib and the video detection process:

plt.show()

video_detector.detectObjectsFromVideo(
    input_file_path="Dataset/videos/street.mp4",
    output_file_path="output-video",
    frames_per_second=20,
    per_frame_function=forFrame,
    minimum_percentage_probability=30,
    return_detected_frame=True,
    log_progress=True)
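To get a feel for what minimum_percentage_probability=30 does, here is a small sketch that applies the same kind of cutoff to a list of detections. It assumes each detection is a dictionary with name and percentage_probability keys, mirroring the entries that the callback receives in output_array. The function filter_detections and the sample values are hypothetical, and whether ImageAI keeps a detection that sits exactly on the threshold is an assumption of this sketch.

```python
def filter_detections(detections, minimum_percentage_probability=30):
    """Keep only detections at or above the probability cutoff (in percent)."""
    return [
        detection
        for detection in detections
        if detection["percentage_probability"] >= minimum_percentage_probability
    ]


# Made-up detections for one frame, for illustration only.
sample = [
    {"name": "car", "percentage_probability": 91.2},
    {"name": "person", "percentage_probability": 28.5},
    {"name": "bus", "percentage_probability": 47.0},
]

kept = filter_detections(sample)
print([detection["name"] for detection in kept])
```

Raising the cutoff removes more low-confidence detections at the cost of missing some real objects; lowering it does the opposite.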

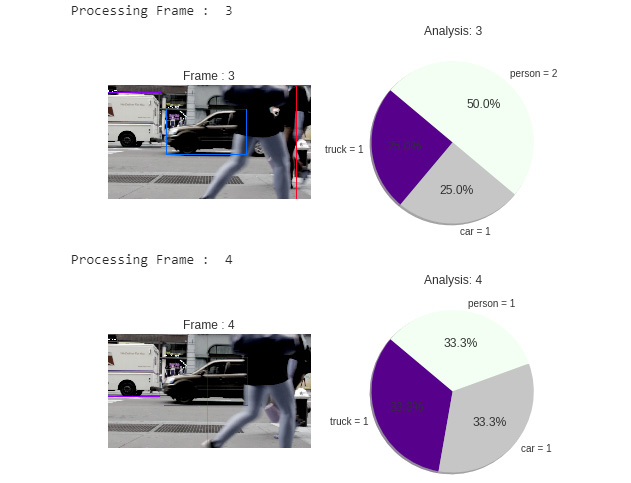

The output will be as shown in the following frames:

Figure 8.7: ImageAI video object detection output

As you can see, the model detects most of the objects in each frame, although not perfectly. The output video, with all the detections drawn on it, is saved in your Chapter 8 root directory; ImageAI writes it in .avi format.

Note:

The Dataset/videos folder contains an additional video, park.mp4. You can follow the same steps to detect and recognize objects in that video as well.
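One way to reuse the steps for park.mp4 is to wrap the detection call in a small function. This is only a sketch: run_detection and the "output-" naming scheme are hypothetical, the detector and callback are passed in as arguments, and the remaining parameters are copied from the call used in this activity.

```python
import os


def run_detection(detector, video_name, per_frame_function,
                  videos_dir="Dataset/videos"):
    """Run the activity's detection call on one video and return its result."""
    stem = os.path.splitext(video_name)[0]
    return detector.detectObjectsFromVideo(
        input_file_path=videos_dir + "/" + video_name,
        # Hypothetical naming scheme so that each video gets its own output file.
        output_file_path="output-" + stem,
        frames_per_second=20,
        per_frame_function=per_frame_function,
        minimum_percentage_probability=30,
        return_detected_frame=True,
        log_progress=True,
    )


# Usage in the notebook, with the objects defined in the earlier steps:
# run_detection(video_detector, "park.mp4", forFrame)
```

Giving each video its own output name avoids overwriting the result of the street.mp4 run.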