Chapter 5: Convolutional Neural Networks for Computer Vision

Activity 5: Making Use of Data Augmentation to Correctly Classify Images of Flowers

Solution

- Open your Google Colab interface.

Note:

You will need to mount your Google Drive, which contains the Dataset folder, and set the paths in the code accordingly before continuing.

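- Load the images and labels of the five classes and concatenate them into single X and y arrays:

```python
import numpy as np

classes = ['daisy', 'dandelion', 'rose', 'sunflower', 'tulip']

# Start with the first class...
X = np.load("Dataset/flowers/%s_x.npy" % (classes[0]))
y = np.load("Dataset/flowers/%s_y.npy" % (classes[0]))
print(X.shape)

# ...then append the remaining four classes
for flower in classes[1:]:
    X_aux = np.load("Dataset/flowers/%s_x.npy" % (flower))
    y_aux = np.load("Dataset/flowers/%s_y.npy" % (flower))
    print(X_aux.shape)
    X = np.concatenate((X, X_aux), axis=0)
    y = np.concatenate((y, y_aux), axis=0)

print(X.shape)
print(y.shape)
```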
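Calling np.concatenate inside the loop copies the growing array on every iteration. A minimal sketch of an alternative, assuming the same one-file-per-class layout: collect the per-class arrays in a list and concatenate once at the end. To stay self-contained it uses small synthetic arrays in place of the .npy files; the helper name load_and_stack and the toy shapes are hypothetical.

```python
import numpy as np

def load_and_stack(per_class_x, per_class_y):
    """Concatenate lists of per-class arrays in a single call each.

    In the activity, each list element would come from np.load() on the
    Dataset/flowers/<class>_x.npy and <class>_y.npy files (hypothetical helper).
    """
    X = np.concatenate(per_class_x, axis=0)
    y = np.concatenate(per_class_y, axis=0)
    return X, y

# Synthetic stand-ins: 5 classes, 3 tiny 4x4 color images each
classes = ['daisy', 'dandelion', 'rose', 'sunflower', 'tulip']
xs = [np.zeros((3, 4, 4, 3), dtype=np.uint8) for _ in classes]
ys = [np.full(3, label) for label, _ in enumerate(classes)]

X, y = load_and_stack(xs, ys)
print(X.shape, y.shape)  # (15, 4, 4, 3) (15,)
```

The result is the same as the loop above; the difference only matters for speed and memory when there are many classes or large arrays.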

- Display some random samples from the dataset:

```python
import random

import cv2
from matplotlib import pyplot as plt

random.seed(42)

for idx in range(5):
    # randint's upper bound is inclusive, so stay within the dataset size
    rnd_index = random.randint(0, len(X) - 1)
    # OpenCV images are stored as BGR; Matplotlib expects RGB
    plt.subplot(1, 5, idx + 1)
    plt.imshow(cv2.cvtColor(X[rnd_index], cv2.COLOR_BGR2RGB))
    plt.xticks([]), plt.yticks([])

plt.savefig("flowers_samples.jpg", bbox_inches='tight')
plt.show()
```

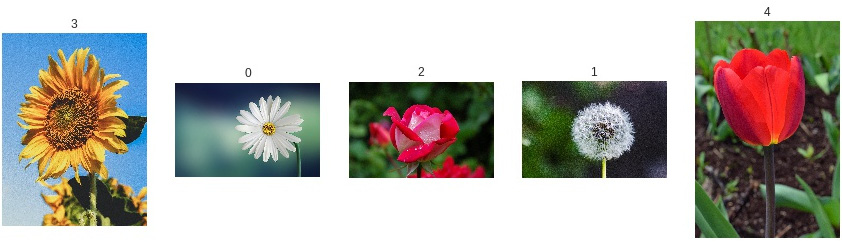

The output is as follows:

Figure 5.23: Samples from the dataset
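OpenCV keeps color images in BGR channel order, which is why each sample goes through cv2.cvtColor(..., cv2.COLOR_BGR2RGB) before plt.imshow. For a 3-channel image this conversion amounts to reversing the last axis. A NumPy-only sketch of that equivalence (the helper name bgr_to_rgb is hypothetical):

```python
import numpy as np

def bgr_to_rgb(img):
    """Reverse the channel axis: (B, G, R) -> (R, G, B)."""
    return img[..., ::-1]

# A single pure-blue pixel in BGR order
pixel_bgr = np.array([[[255, 0, 0]]], dtype=np.uint8)
pixel_rgb = bgr_to_rgb(pixel_bgr)
print(pixel_rgb[0, 0].tolist())  # [0, 0, 255]
```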

- Now, we will normalize the images and one-hot encode the labels:

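```python
from keras import utils as np_utils

# Scale 8-bit pixel values to the [0, 1] range
X = (X.astype(np.float32)) / 255.0
# Turn each integer label into a one-hot vector with one column per class
y = np_utils.to_categorical(y, len(classes))

print(X.shape)
print(y.shape)
```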
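to_categorical maps each integer label to a row of an identity matrix, and dividing by 255.0 maps 8-bit pixels into [0, 1]. A NumPy-only sketch of both steps on a toy batch, for illustration (this is not the Keras implementation):

```python
import numpy as np

num_classes = 5
labels = np.array([0, 3, 4, 1])

# Row i of the identity matrix is the one-hot vector for class i
one_hot = np.eye(num_classes, dtype=np.float32)[labels]
print(one_hot.shape)  # (4, 5)

# Scale 8-bit pixels into [0, 1], as the activity does with / 255.0
pixels = np.array([0, 128, 255], dtype=np.uint8)
scaled = pixels.astype(np.float32) / 255.0
print(scaled.min(), scaled.max())  # 0.0 1.0
```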

- Split the data into training and testing sets:

```python
from sklearn.model_selection import train_test_split

# Hold out 20% of the images for testing
x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2)
input_shape = x_train.shape[1:]  # (height, width, channels) of one image

print(x_train.shape)
print(y_train.shape)
print(x_test.shape)
print(y_test.shape)
print(input_shape)
```
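train_test_split shuffles the samples before holding out the test fraction, and because no random_state is passed, the split changes on every run. A NumPy-only sketch of a seeded 80/20 shuffle-and-split, for illustration only (the function name shuffled_split is hypothetical; with scikit-learn you can instead pass random_state, and stratify=y to keep class proportions equal in both sets):

```python
import numpy as np

def shuffled_split(X, y, test_size=0.2, seed=42):
    """Shuffle X and y with the same permutation, then hold out test_size."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    n_test = int(np.ceil(test_size * len(X)))
    test_idx, train_idx = order[:n_test], order[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]

X = np.arange(10).reshape(10, 1)
y = np.arange(10)
x_train, x_test, y_train, y_test = shuffled_split(X, y)
print(x_train.shape, x_test.shape)  # (8, 1) (2, 1)
```

Shuffling X and y with one shared permutation is what keeps every image paired with its label.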

- Import the libraries and build the CNN:

```python
from keras.models import Sequential
from keras.callbacks import ModelCheckpoint
from keras.layers import Input, Dense, Dropout, Flatten
from keras.layers import Conv2D, Activation, BatchNormalization

def CNN(input_shape):
    model = Sequential()

    # Four convolutional blocks; each stride-2 'same' convolution halves
    # the spatial size (rounding up)
    model.add(Conv2D(32, kernel_size=(5, 5), padding='same', strides=(2, 2), input_shape=input_shape))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.2))

    model.add(Conv2D(64, kernel_size=(3, 3), padding='same', strides=(2, 2)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.2))

    model.add(Conv2D(128, kernel_size=(3, 3), padding='same', strides=(2, 2)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.2))

    model.add(Conv2D(256, kernel_size=(3, 3), padding='same', strides=(2, 2)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.2))

    # Fully connected classifier head
    model.add(Flatten())
    model.add(Dense(512))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.5))
    # One softmax output per flower class
    model.add(Dense(5, activation="softmax"))

    return model
```
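Each Conv2D layer uses padding='same' with strides=(2, 2), so its output size is ceil(input / 2). Assuming 150x150x3 inputs (the size the unseen images are resized to later in this activity), the arithmetic below traces the feature-map size through the four convolutions and counts the weights of the Dense(512) layer after Flatten. It only does the arithmetic; it does not build the Keras model.

```python
import math

def same_conv_out(size, stride):
    """Output length of a 'same'-padded convolution: ceil(size / stride)."""
    return math.ceil(size / stride)

size = 150                      # assumed input height and width
filters = [32, 64, 128, 256]    # filters of the four Conv2D layers
for f in filters:
    size = same_conv_out(size, 2)
    print(f"Conv2D({f}) -> {size}x{size}x{f}")

flat = size * size * filters[-1]
dense_params = flat * 512 + 512  # weights + biases of Dense(512)
print(flat, dense_params)        # 25600 13107712
```

The feature maps shrink 150 → 75 → 38 → 19 → 10, so Flatten produces 25,600 values, and the Dense(512) layer alone holds about 13.1 million parameters, far more than the four convolutions combined (under 400,000).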

- Declare the ImageDataGenerator:

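```python
from keras.preprocessing.image import ImageDataGenerator

# Random transformations applied on the fly to each training batch
datagen = ImageDataGenerator(
    rotation_range=10,         # rotate by up to 10 degrees
    zoom_range=0.2,            # zoom by a factor between 0.8 and 1.2
    width_shift_range=0.2,     # shift horizontally by up to 20% of the width
    height_shift_range=0.2,    # shift vertically by up to 20% of the height
    shear_range=0.1,           # shear angle in degrees
    horizontal_flip=True       # randomly mirror images left-right
)
```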
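ImageDataGenerator draws a fresh random transformation for every image each time a batch is produced, so each epoch sees slightly different versions of the training images. The sketch below imitates two of the configured transforms, horizontal_flip and width_shift_range, in plain NumPy. It is a simplified, hypothetical illustration rather than the Keras implementation: it zero-fills the uncovered columns, whereas ImageDataGenerator's default fill_mode is 'nearest', and it ignores rotation, zoom, shear and height shift.

```python
import numpy as np

def random_flip_and_shift(img, rng, shift_range=0.2):
    """Randomly flip an HxWxC image left-right and shift it horizontally.

    Simplified illustration only: vacated columns are zero-filled.
    """
    if rng.random() < 0.5:
        img = img[:, ::-1, :]                  # horizontal flip
    width = img.shape[1]
    max_shift = int(shift_range * width)
    dx = int(rng.integers(-max_shift, max_shift + 1))
    out = np.zeros_like(img)
    if dx >= 0:
        out[:, dx:, :] = img[:, :width - dx, :]   # shift right
    else:
        out[:, :dx, :] = img[:, -dx:, :]          # shift left
    return out

rng = np.random.default_rng(0)
img = np.arange(5 * 10 * 3, dtype=np.float32).reshape(5, 10, 3)
aug = random_flip_and_shift(img, rng)
print(aug.shape)  # (5, 10, 3)
```

Because the transformation is redrawn on every call, the same source image yields a different training sample each time it is used.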

- We will now train the model:

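```python
import os

# fit() only computes statistics for feature-wise normalization or ZCA
# whitening, which are not enabled in this generator
datagen.fit(x_train)

model = CNN(input_shape)
# In tf.keras the 'Adadelta' string uses learning_rate=0.001 (older standalone
# Keras defaulted to 1.0), so results may differ between versions
model.compile(loss='categorical_crossentropy', optimizer='Adadelta', metrics=['accuracy'])

# Keep only the checkpoint with the lowest validation loss
os.makedirs('Models', exist_ok=True)
ckpt = ModelCheckpoint('Models/model_flowers.h5', save_best_only=True,
                       monitor='val_loss', mode='min', save_weights_only=False)

# {…} The detailed code can be found on GitHub

# Train on augmented batches generated on the fly
# (fit_generator is deprecated in TensorFlow 2.x; fit accepts generators directly)
model.fit(datagen.flow(x_train, y_train, batch_size=32),
          epochs=200,
          validation_data=(x_test, y_test),
          callbacks=[ckpt],
          steps_per_epoch=len(x_train) // 32,
          workers=4)
```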
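With save_best_only=True, monitor='val_loss' and mode='min', ModelCheckpoint overwrites Models/model_flowers.h5 only in epochs where the validation loss improves on the best value seen so far, so the file ends up holding the best epoch rather than the last of the 200. A plain-Python sketch of that decision rule with made-up loss values (the function name epochs_saved is hypothetical, not part of Keras):

```python
def epochs_saved(val_losses):
    """Return the 1-based epochs in which a save_best_only checkpoint
    monitoring val_loss in 'min' mode would write the model file."""
    best = float('inf')
    saved = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:          # strict improvement over the best so far
            best = loss
            saved.append(epoch)
    return saved

# Hypothetical validation losses for six epochs
losses = [1.20, 0.95, 0.97, 0.80, 0.85, 0.81]
print(epochs_saved(losses))  # [1, 2, 4]
```

This is why the evaluation step reloads the weights from the checkpoint file instead of using the model as it stands after the final epoch.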

- After training, we will evaluate the model:

```python
from sklearn import metrics

# Load the checkpoint with the lowest validation loss
model.load_weights('Models/model_flowers.h5')

# Predicted class index for every test image
y_pred = model.predict(x_test, batch_size=32, verbose=0)
y_pred = np.argmax(y_pred, axis=1)

# True class indices, recovered from the one-hot test labels
y_test_pred = np.argmax(y_test, axis=1)

# {…} The detailed code can be found on GitHub

print(y_pred)

# Evaluate the prediction
accuracy = metrics.accuracy_score(y_test_pred, y_pred)
print('Acc: %.4f' % accuracy)
```

- The accuracy achieved is 91.68%. Because the train/test split and the training run are not seeded, your exact figure may differ.
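Accuracy is the fraction of test images whose predicted class index (the argmax of the softmax output) matches the true index (the argmax of the one-hot label). A single figure hides which flowers get confused with each other, so a per-class confusion matrix can be a useful complement. A NumPy-only sketch on toy data (sklearn.metrics.confusion_matrix provides an equivalent table; the helper name confusion is hypothetical):

```python
import numpy as np

def confusion(y_true, y_pred, num_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

# Toy softmax outputs for 4 test images and their one-hot labels (3 classes)
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.3, 0.4],
                  [0.6, 0.3, 0.1]])
y_onehot = np.eye(3)[[0, 1, 2, 1]]

y_pred = probs.argmax(axis=1)      # [0, 1, 2, 0]
y_true = y_onehot.argmax(axis=1)   # [0, 1, 2, 1]
accuracy = np.mean(y_pred == y_true)
print('Acc: %.4f' % accuracy)      # Acc: 0.7500
print(confusion(y_true, y_pred, 3))
```

Here the off-diagonal entry in row 1, column 0 shows the single mistake: an image of class 1 predicted as class 0.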

- Try the model on unseen data:

```python
classes = ['daisy', 'dandelion', 'rose', 'sunflower', 'tulip']
images = ['sunflower.jpg', 'daisy.jpg', 'rose.jpg', 'dandelion.jpg', 'tulip .jpg']

model.load_weights('Models/model_flowers.h5')

for number in range(len(images)):
    imgLoaded = cv2.imread('Dataset/testing/%s' % (images[number]))
    # Apply the same preprocessing as the training data
    img = cv2.resize(imgLoaded, (150, 150))
    img = (img.astype(np.float32)) / 255.0
    img = img.reshape(1, 150, 150, 3)  # add the batch dimension

    plt.subplot(1, 5, number + 1)
    plt.imshow(cv2.cvtColor(imgLoaded, cv2.COLOR_BGR2RGB))
    # Title each image with its predicted class index
    plt.title(np.argmax(model.predict(img)[0]))
    plt.xticks([]), plt.yticks([])

plt.show()
```

The output will look like this:

Figure 5.24: Prediction of roses result from Activity05
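For each unseen image, the loop repeats the training-time preprocessing (resize to 150x150, scale by 1/255), adds a batch dimension because model.predict expects a batch, and takes the argmax of the five softmax outputs. The sketch below isolates the last two steps in NumPy and maps the index back to a flower name; the probability vector is made up, and np.expand_dims(img, axis=0) is shown as an alternative to the reshape(1, 150, 150, 3) used above.

```python
import numpy as np

classes = ['daisy', 'dandelion', 'rose', 'sunflower', 'tulip']

# Stand-in for an image already resized to 150x150 and scaled to [0, 1]
img = np.random.default_rng(0).random((150, 150, 3)).astype(np.float32)

# model.predict expects a batch, so add a leading axis: (1, 150, 150, 3)
batch = np.expand_dims(img, axis=0)
assert np.array_equal(batch, img.reshape(1, 150, 150, 3))

# Hypothetical softmax output for that one-image batch
probs = np.array([[0.05, 0.02, 0.85, 0.03, 0.05]])
predicted = classes[int(np.argmax(probs[0]))]
print(batch.shape, predicted)  # (1, 150, 150, 3) rose
```

Indexing classes with the argmax turns the numeric titles in the plot into readable flower names, if you prefer that over raw indices.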

Note:

The detailed code for this activity can be found on GitHub - https://github.com/PacktPublishing/Artificial-Vision-and-Language-Processing-for-Robotics/blob/master/Lesson05/Activity05/Activity05.ipynb