Chapter 2: Introduction to Computer Vision

Activity 2: Classify 10 Types of Clothes from the Fashion-MNIST Data

Solution

- Open up your Google Colab interface.

- Create a folder for the book, download the Dataset folder from GitHub, and upload it into the folder.

- Import the drive and mount it as follows:

from google.colab import drive

drive.mount('/content/drive')

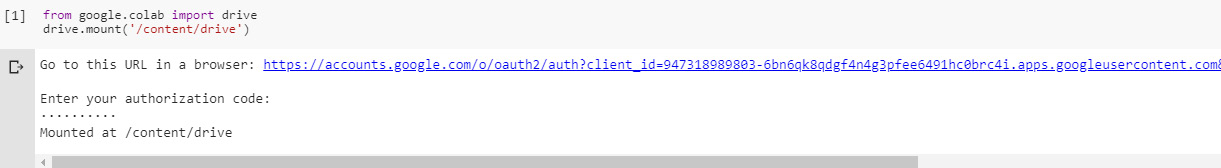

Once you have mounted your drive for the first time, you will have to enter the authorization code mentioned by clicking on the URL given by Google and pressing the Enter key on your keyboard:

Figure 2.38: Image displaying the Google Colab authorization step

- Now that you have mounted the drive, you need to set the path of the directory:

cd /content/drive/My Drive/C13550/Lesson02/Activity02/

- Load the dataset and show five samples:

from keras.datasets import fashion_mnist

(x_train, y_train), (x_test, y_test) = fashion_mnist.load_data()

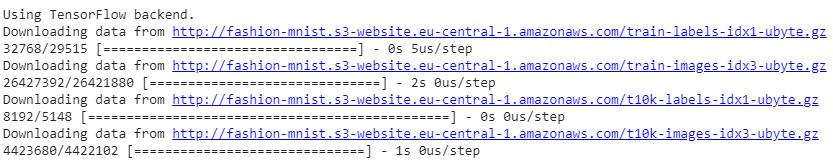

The output is as follows:

Figure 2.39: Loading datasets with five samples

import random

from sklearn import metrics

from sklearn.utils import shuffle

random.seed(42)

from matplotlib import pyplot as plt

for idx in range(5):

    # Pick a random training image and show it in the idx-th subplot
    rnd_index = random.randint(0, len(x_train) - 1)

    plt.subplot(1,5,idx+1),plt.imshow(x_train[rnd_index],'gray')

    plt.xticks([]),plt.yticks([])

plt.show()

Figure 2.40: Samples of images from the Fashion-MNIST dataset

- Preprocess the data:

import numpy as np

from keras import utils as np_utils

x_train = (x_train.astype(np.float32))/255.0

x_test = (x_test.astype(np.float32))/255.0

x_train = x_train.reshape(x_train.shape[0], 28, 28, 1)

x_test = x_test.reshape(x_test.shape[0], 28, 28, 1)

y_train = np_utils.to_categorical(y_train, 10)

y_test = np_utils.to_categorical(y_test, 10)

input_shape = x_train.shape[1:]
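To make the preprocessing step concrete, here is a minimal NumPy-only sketch of what the scaling, reshaping, and one-hot encoding do. The tiny `fake_images` and `fake_labels` arrays and the `one_hot` helper are hypothetical stand-ins for the real data and for the `to_categorical` call; they are for illustration only.

```python
import numpy as np

# Hypothetical stand-in for x_train: two 28x28 grayscale images (uint8 pixels).
fake_images = np.zeros((2, 28, 28), dtype=np.uint8)
fake_images[0, 0, 0] = 255  # one fully white pixel

# Scale pixel values from [0, 255] to [0.0, 1.0].
scaled = fake_images.astype(np.float32) / 255.0

# Add a trailing channel axis: (N, 28, 28) -> (N, 28, 28, 1).
reshaped = scaled.reshape(scaled.shape[0], 28, 28, 1)


def one_hot(labels, num_classes):
    """Illustrative equivalent of one-hot encoding integer class labels."""
    encoded = np.zeros((len(labels), num_classes), dtype=np.float32)
    encoded[np.arange(len(labels)), labels] = 1.0
    return encoded


fake_labels = np.array([9, 0])  # hypothetical class IDs
encoded_labels = one_hot(fake_labels, 10)

print(reshaped.shape)      # shape of the image batch after reshaping
print(encoded_labels[0])   # a single 1.0 at index 9, zeros elsewhere
```

Each label becomes a vector of length 10, which matches the 10-unit softmax output layer used later in this activity.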

- Build the architecture of the neural network using Dense layers:

from keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau

from keras.layers import Input, Dense, Dropout, Flatten

from keras.preprocessing.image import ImageDataGenerator

from keras.layers import Conv2D, MaxPooling2D, Activation, BatchNormalization

from keras.models import Sequential, Model

from keras.optimizers import Adam, Adadelta

def DenseNN(input_shape):

    model = Sequential()

    # Dense layers act on the last axis, so the 28x28 grid is kept until Flatten
    model.add(Dense(128, input_shape=input_shape))

    model.add(BatchNormalization())

    model.add(Activation('relu'))

    model.add(Dropout(0.2))

    model.add(Dense(128))

    model.add(BatchNormalization())

    model.add(Activation('relu'))

    model.add(Dropout(0.2))

    model.add(Dense(64))

    model.add(BatchNormalization())

    model.add(Activation('relu'))

    model.add(Dropout(0.2))

    # Collapse the per-pixel features into a single vector per image
    model.add(Flatten())

    model.add(Dense(64))

    model.add(BatchNormalization())

    model.add(Activation('relu'))

    model.add(Dropout(0.2))

    # One output probability per clothing class
    model.add(Dense(10, activation="softmax"))

    return model

model = DenseNN(input_shape)

Note:

The entire code file for this activity can be found on GitHub in the Lesson02 | Activity02 folder.
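Because the first Dense layers receive the unflattened (28, 28, 1) input, it can help to trace how the tensor shape changes through the network. The following plain-Python sketch does that bookkeeping by hand. It assumes that a Dense layer acts only on the last axis of its input and that BatchNormalization stores four values per feature (gamma, beta, moving mean, moving variance); the helper functions are hypothetical and only mirror the layer order of `DenseNN`. Activation and Dropout layers are omitted because they change neither the shape nor the parameter count.

```python
def dense(shape, units):
    """Return (output_shape, parameter_count) for a Dense layer."""
    params = shape[-1] * units + units  # weights + biases
    return shape[:-1] + (units,), params


def batch_norm(shape):
    """BatchNormalization keeps the shape; 4 values per feature (assumed)."""
    return shape, 4 * shape[-1]


def flatten(shape):
    """Flatten merges all axes into one and has no parameters."""
    size = 1
    for dim in shape:
        size *= dim
    return (size,), 0


layers = [
    ("Dense(128)", lambda s: dense(s, 128)),
    ("BatchNorm", batch_norm),
    ("Dense(128)", lambda s: dense(s, 128)),
    ("BatchNorm", batch_norm),
    ("Dense(64)", lambda s: dense(s, 64)),
    ("BatchNorm", batch_norm),
    ("Flatten", flatten),
    ("Dense(64)", lambda s: dense(s, 64)),
    ("BatchNorm", batch_norm),
    ("Dense(10)", lambda s: dense(s, 10)),
]

shape = (28, 28, 1)  # input_shape from the preprocessing step
total = 0
history = []
for name, layer in layers:
    shape, params = layer(shape)
    total += params
    history.append((name, shape, params))
    print(f"{name:<12}{str(shape):<16}{params:>10,}")
print("Total parameters:", total)
```

Under these assumptions, almost all of the parameters sit in the Dense layer that follows Flatten, since it connects 28 x 28 x 64 flattened features to 64 units. Calling `model.summary()` on the real model is the way to confirm the actual numbers.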

- Compile and train the model:

optimizer = Adadelta()

model.compile(loss='categorical_crossentropy', optimizer=optimizer, metrics=['accuracy'])

ckpt = ModelCheckpoint('model.h5', save_best_only=True,monitor='val_loss', mode='min', save_weights_only=False)

model.fit(x_train, y_train, batch_size=128, epochs=20, verbose=1, validation_data=(x_test, y_test), callbacks=[ckpt])

The accuracy obtained is 88.72%. This problem is harder to solve than the one in the last exercise, which is why the accuracy is lower.
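The model is compiled with the `categorical_crossentropy` loss and the `accuracy` metric. As a rough illustration of what these two quantities measure, here is a minimal NumPy sketch. The function names, the clipping constant `eps`, and the three-class example values are assumptions made for this illustration, not the Keras implementation.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean cross-entropy between one-hot targets and predicted probabilities."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))


def accuracy(y_true, y_pred):
    """Fraction of samples whose most probable class matches the target."""
    return float(np.mean(np.argmax(y_true, axis=1) == np.argmax(y_pred, axis=1)))


# Hypothetical example: two samples, three classes.
y_true = np.array([[1, 0, 0], [0, 1, 0]], dtype=np.float64)
y_pred = np.array([[0.8, 0.1, 0.1], [0.3, 0.4, 0.3]], dtype=np.float64)

loss = categorical_crossentropy(y_true, y_pred)
acc = accuracy(y_true, y_pred)
print("loss:", loss)
print("accuracy:", acc)
```

Both samples are classified correctly here, yet the loss is not zero: the second prediction is correct but not confident, and cross-entropy penalizes that. This is one reason the checkpoint in this activity monitors `val_loss` rather than accuracy.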

- Make the predictions:

import cv2

images = ['ankle-boot.jpg', 'bag.jpg', 'trousers.jpg', 't-shirt.jpg']

for number in range(len(images)):

    # Load the test image in grayscale and shrink it to the 28x28 input size
    imgLoaded = cv2.imread('Dataset/testing/%s'%(images[number]),0)

    img = cv2.resize(imgLoaded, (28, 28))

    # Invert the pixel values before feeding the image to the model
    img = np.invert(img)

    cv2.imwrite('test.jpg',img)

    img = (img.astype(np.float32))/255.0

    img = img.reshape(1, 28, 28, 1)

    plt.subplot(1,5,number+1),plt.imshow(imgLoaded,'gray')

    # Use the index of the most probable class as the title
    plt.title(np.argmax(model.predict(img)[0]))

    plt.xticks([]),plt.yticks([])

plt.show()

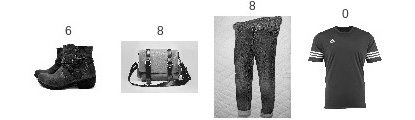

Output will look like this:

Figure 2.41: Prediction for clothes using Neural Networks

The model has classified the bag and the t-shirt correctly, but it has failed to classify the boots and the trousers. These samples are very different from the ones that it was trained on.
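The plot titles in the prediction step are numeric class indices. The sketch below shows, under stated assumptions, how the same preprocessing can be wrapped in a helper and how an index can be mapped to a readable label. `CLASS_NAMES` follows the standard Fashion-MNIST label order; the `prepare` and `label_of` helpers, the synthetic `photo`, and the `fake_probs` vector (a stand-in for `model.predict(img)[0]`) are hypothetical. The inversion is presumably needed because Fashion-MNIST images show light objects on a dark background, while the test photos appear to have a light background.

```python
import numpy as np

# Fashion-MNIST label names, indexed by class ID.
CLASS_NAMES = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']


def prepare(img_uint8):
    """Invert, scale, and reshape a 28x28 uint8 grayscale image for the model."""
    inverted = np.invert(img_uint8)               # for uint8: 255 - pixel
    scaled = inverted.astype(np.float32) / 255.0  # [0, 255] -> [0.0, 1.0]
    return scaled.reshape(1, 28, 28, 1)           # batch of one, one channel


def label_of(probabilities):
    """Map a vector of 10 class probabilities to its class name."""
    return CLASS_NAMES[int(np.argmax(probabilities))]


# Hypothetical photo: white background (255) with a dark square object (30).
photo = np.full((28, 28), 255, dtype=np.uint8)
photo[10:18, 10:18] = 30
batch = prepare(photo)

# Stand-in for model.predict(batch)[0]; not a real model output.
fake_probs = np.zeros(10)
fake_probs[8] = 0.9

print(batch.shape, label_of(fake_probs))
```

With a trained model available, `label_of(model.predict(img)[0])` could replace the numeric title in the plotting loop.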