Deploying the Spark application on a cluster

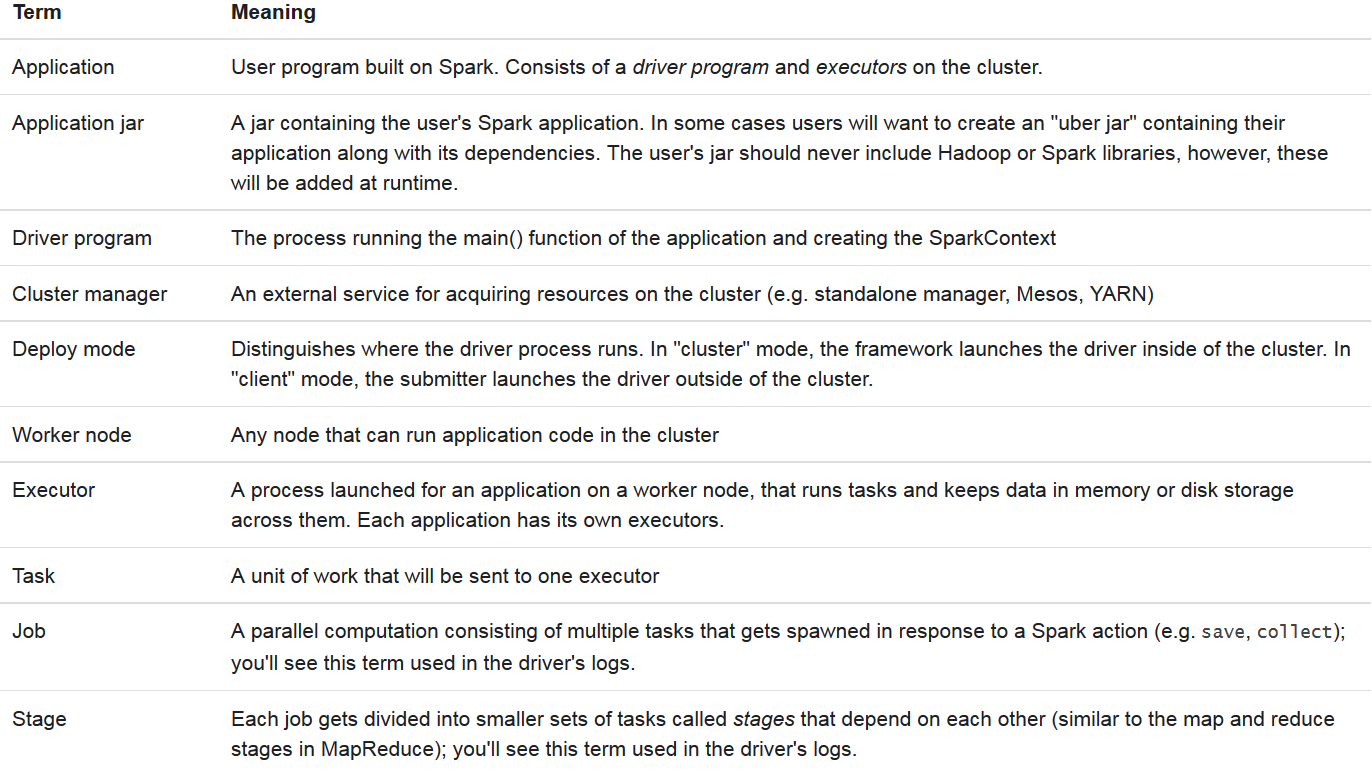

In this section, we will discuss how to deploy Spark jobs on a computing cluster. We will see how to deploy clusters in three deploy modes: standalone, YARN, and Mesos. The following figure summarizes terms that are needed to refer to cluster concepts in this chapter:

Figure 8: Terms that are needed to refer to cluster concepts (source: http://spark.apache.org/docs/latest/cluster-overview.html#glossary)

However, before diving onto deeper, we need to know how to submit a Spark job in general.

Submitting Spark jobs

Once a Spark is as either a jar file (written in Scala or Java) or a Python file, it can be submitted using the Spark-submit script located under the bin directory in Spark distribution (aka $SPARK_HOME/bin). According to the API documentation provided in Spark website (http://spark.apache.org/docs/latest/submitting-applications.html), the script takes care of the following:

- Setting up the classpath of

JAVA_HOME,SCALA_HOMEwith Spark - Setting...