Word2vec explained

First things first: word2vec is not a single algorithm but rather a family of algorithms that attempt to encode the semantic and syntactic meaning of a word as a vector of N numbers (hence, word-to-vector = word2vec). We will explore each of these algorithms in depth in this chapter, and we will also point you to other approaches to text vectorization that you may find helpful.

What is a word vector?

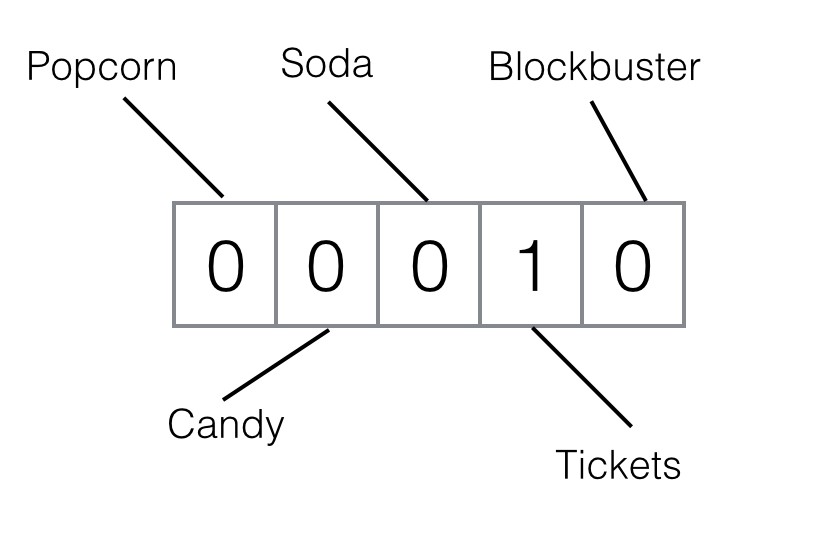

In its simplest form, a word vector is merely a one-hot encoding, whereby every element in the vector represents a word in our vocabulary, and the given word is encoded with 1 while all the other elements are encoded with 0. Suppose our vocabulary only has the following movie terms: Popcorn, Candy, Soda, Tickets, and Blockbuster.

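Following the logic we just explained, and taking the vocabulary in the order listed, we could encode the term Tickets as the vector [0, 0, 0, 1, 0]: the fourth element is 1 because Tickets is the fourth term, and every other element is 0.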
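To make the encoding concrete, here is a minimal Python sketch of one-hot encoding for the five-term movie vocabulary. The function name `one_hot` and the choice to keep the vocabulary in the order it was listed are assumptions made for illustration; any fixed ordering would work as long as it is used consistently.

```python
# Vocabulary in the order given in the text; the ordering is an arbitrary
# but fixed choice (an assumption for this sketch).
vocabulary = ["Popcorn", "Candy", "Soda", "Tickets", "Blockbuster"]


def one_hot(word, vocab):
    """Return a list with 1 at the word's position in vocab and 0 elsewhere."""
    if word not in vocab:
        raise ValueError(f"{word!r} is not in the vocabulary")
    return [1 if term == word else 0 for term in vocab]


tickets_vector = one_hot("Tickets", vocabulary)
print(tickets_vector)  # [0, 0, 0, 1, 0]
```

Note that the vector is as long as the vocabulary, so a realistic vocabulary of tens of thousands of words would produce very long vectors that are almost entirely zeros.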

Using this simplistic form of encoding, which is what we do when we create a bag-of-words matrix, there is no meaningful comparison...
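One way to see the comparison problem is to measure the cosine similarity between one-hot vectors. The sketch below is illustrative only: the helper names `one_hot` and `cosine_similarity` are assumptions, and cosine similarity is just one common way to compare vectors. Every pair of distinct words scores exactly 0, so the encoding cannot tell us that Popcorn is any closer to Candy than it is to Blockbuster.

```python
import math

vocabulary = ["Popcorn", "Candy", "Soda", "Tickets", "Blockbuster"]


def one_hot(word, vocab):
    """Return a float list with 1.0 at the word's position and 0.0 elsewhere."""
    return [1.0 if term == word else 0.0 for term in vocab]


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


popcorn = one_hot("Popcorn", vocabulary)
candy = one_hot("Candy", vocabulary)
blockbuster = one_hot("Blockbuster", vocabulary)

print(cosine_similarity(popcorn, candy))        # 0.0
print(cosine_similarity(popcorn, blockbuster))  # 0.0
print(cosine_similarity(popcorn, popcorn))      # 1.0
```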