The combination of OSS, public cloud, and containerization gives a developer a virtually unlimited amount of compute power, combined with the ability to rapidly compose applications that deliver more than the sum of their individual parts. The individual parts that make up an application generally do only one thing and do it well (as in the Unix philosophy).

Developers can now architect applications that are deployed as microservices. When done right, microservices, like SOA, enable quick feedback during development, testing, and deployment. Microservices are not a free lunch, however, and have various problems, which are listed in the 2014 article Microservices - Not A Free Lunch! (you can read this article at http://highscalability.com/blog/2014/4/8/microservices-not-a-free-lunch.html). With the technologies we listed earlier, developers can have their cake and eat it too, as more and more of the "not free lunch" part is available as managed services, such as AKS. The public cloud providers are competing by investing in managed services to become the go-to provider for developers.

Even with more managed services coming to relieve the burden on the developer and operator, developers need to know the underlying workings of these services to make effective use of them in production. Just as developers write automated tests, future developers will be expected to know how their application can be delivered quickly and reliably to the customer.

Operators will take the hints from the developer specs and deliver a stable system whose metrics can be used for future software development, thus completing the virtuous cycle.

Developers taking responsibility for running the software that they develop, instead of throwing it over the wall to operations, is a change in mindset that has its origins at Amazon (https://www.slideshare.net/ufried/the-truth-about-you-build-it-you-run-it).

The DevOps model does not only change the responsibilities of the operations and development teams; it also changes the business side of organizations. The foundation of DevOps can enable businesses to accelerate their time to market significantly when combined with a lean and agile process and operations model.

Microservices, Docker, and Kubernetes can quickly become overwhelming for anyone. We can make it easier for ourselves by understanding the basics. It would be an understatement to say that understanding the fundamentals is critical to performing root cause analysis on production problems.

Any application is ultimately a process that is running on an operating system (OS). The process requires the following to be usable:

- Compute (CPU)

- Memory (RAM)

- Storage (disk)

- Network (NIC)
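To make these four resources concrete, the following minimal sketch inspects them from inside a running process. It is only an illustration, not something the rest of the chapter depends on: the function name `describe_current_process` is hypothetical, and the code assumes a Unix-like OS, since the standard library's `resource` module is not available on Windows.

```python
import os
import resource
import shutil
import socket


def describe_current_process() -> dict:
    """Return a small snapshot of the four resource types for this process."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    disk = shutil.disk_usage("/")
    return {
        "pid": os.getpid(),
        # Compute: CPU seconds consumed so far (user + system time).
        "cpu_seconds": usage.ru_utime + usage.ru_stime,
        # Memory: peak resident set size (kilobytes on Linux, bytes on macOS).
        "max_rss": usage.ru_maxrss,
        # Storage: free bytes on the filesystem that holds "/".
        "disk_free_bytes": disk.free,
        # Network: the host name that this process sees.
        "hostname": socket.gethostname(),
    }


if __name__ == "__main__":
    for key, value in describe_current_process().items():
        print(f"{key}: {value}")
```

The values differ from machine to machine; the point is only that an ordinary process can see and consume all four kinds of resources.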

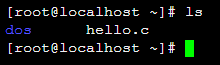

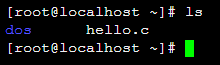

Launch the online Linux terminal emulator at https://bellard.org/jslinux/vm.html?url=https://bellard.org/jslinux/buildroot-x86.cfg. Then, type the ls command at the prompt.

This command lists the files in the current directory (which happens to be the root user's home directory).

Congratulations, you have launched your first container!

Well, not quite. In principle, however, there is no difference between the command you ran and launching a container.

So, what is the difference between the two? The clue lies in the word "contain". The ls process has very limited containment (it is limited only by the rights that the user has). It can potentially see all files and has access to all of the memory, network, and CPU available to the OS.

A container is contained by the OS, which controls its access to compute, memory, storage, and network. Each container runs in its own set of namespaces (https://medium.com/@teddyking/linux-namespaces-850489d3ccf), which determine what its processes can see. The resources that those processes can use are limited by control groups (cgroups).

Every container process has contained access via cgroups and namespaces, which makes it look (from the container process perspective) as if it is running as a complete instance of an OS. This means that it appears to have its own root filesystem, init process (PID 1), memory, compute, and network.
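On Linux, the namespaces and cgroups that a process belongs to are visible under /proc. The following is a minimal sketch, assuming a Linux host with /proc mounted; the function names `namespace_ids` and `cgroup_membership` are hypothetical and were chosen only for this illustration. On other operating systems, both functions simply return empty results.

```python
import os
from pathlib import Path


def namespace_ids(pid: str = "self") -> dict:
    """Map each namespace type (pid, net, mnt, ...) to its kernel identifier."""
    ns_dir = Path("/proc") / pid / "ns"
    if not ns_dir.is_dir():  # not Linux, or /proc is not mounted
        return {}
    result = {}
    for entry in sorted(ns_dir.iterdir()):
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            result[entry.name] = os.readlink(entry)
        except OSError:
            continue  # some entries may not be readable without privileges
    return result


def cgroup_membership(pid: str = "self") -> list:
    """Return the lines of /proc/<pid>/cgroup, one per cgroup hierarchy."""
    cgroup_file = Path("/proc") / pid / "cgroup"
    try:
        return cgroup_file.read_text().splitlines()
    except OSError:
        return []


if __name__ == "__main__":
    for name, identifier in namespace_ids().items():
        print(f"{name:10} {identifier}")
    for line in cgroup_membership():
        print(line)
```

Running this on a host and again inside a container (using an image that includes Python) should show different identifiers for most namespace types, which is what gives the container its own view of the system.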

Since a running container is just a set of processes, it is extremely lightweight and fast, and all the tools that are used to debug and monitor processes can be used out of the box.
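As a rough illustration of the idea that ordinary process tooling applies, the sketch below lists every process visible in /proc, much as ps does. It assumes a Linux host; the function name `list_processes` is hypothetical. On a Linux host that runs containers, the containerized processes would be expected to show up in this list alongside all the others.

```python
import os
from pathlib import Path


def list_processes() -> list:
    """Return sorted (pid, command name) pairs for processes visible in /proc."""
    proc = Path("/proc")
    if not proc.is_dir():  # not Linux, or /proc is not mounted
        return []
    processes = []
    for entry in proc.iterdir():
        if not entry.name.isdigit():
            continue  # only numeric directory names are process IDs
        try:
            name = (entry / "comm").read_text().strip()
        except OSError:
            continue  # the process exited, or we may not read it
        processes.append((int(entry.name), name))
    return sorted(processes)


if __name__ == "__main__":
    for pid, name in list_processes():
        marker = " (this script)" if pid == os.getpid() else ""
        print(f"{pid:>7} {name}{marker}")
```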

You can play with Docker by creating a free account at Docker Hub (https://hub.docker.com/) and using that login at Play with Docker (https://labs.play-with-docker.com/).

First, type docker run -it ubuntu. After a short period of time, you will get a prompt such as root@<randomhexnumber>:/#. Next, type exit, and run the docker run -it ubuntu command again. You will notice that it is super fast! Even though you have launched a completely new instance of Ubuntu (on a host that is probably running Alpine Linux), it is available instantly. This magic is, of course, due to the fact that containers are nothing but regular processes on the OS. The second launch is also quicker because the image was already pulled during the first run. Finally, type exit to complete this exercise. The full interaction of the session on Play with Docker (https://labs.play-with-docker.com/) is shown in the following script for your reference. It demonstrates the commands and their output:

docker run -it ubuntu # runs the standard Ubuntu Linux distribution as a container

exit # the preceding command pulls the image from Docker Hub and puts you in the container's shell; exit gets you out of the container

docker run -it ubuntu # running it again shows how fast launching a container is (compare it to booting a full-blown virtual machine (VM))

exit # same as before; gets you out of the container

The following output was produced by running the preceding commands, with docker ps and date added to show the running containers and the time at each step:

###############################################################

# WARNING!!!! #

# This is a sandbox environment. Using personal credentials #

# is HIGHLY! discouraged. Any consequences of doing so are #

# completely the user's responsibilites. #

# #

# The PWD team. #

###############################################################

[node1] (local) [email protected] ~

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[node1] (local) [email protected] ~

$ date

Mon Oct 29 05:58:25 UTC 2018

[node1] (local) [email protected] ~

$ docker run -it ubuntu

Unable to find image 'ubuntu:latest' locally

latest: Pulling from library/ubuntu

473ede7ed136: Pull complete

c46b5fa4d940: Pull complete

93ae3df89c92: Pull complete

6b1eed27cade: Pull complete

Digest: sha256:29934af957c53004d7fb6340139880d23fb1952505a15d69a03af0d1418878cb

Status: Downloaded newer image for ubuntu:latest

root@03c373cb2eb8:/# exit

exit

[node1] (local) [email protected] ~

$ date

Mon Oct 29 05:58:41 UTC 2018

[node1] (local) [email protected] ~

$ docker run -it ubuntu

root@4774cbe26ad7:/# exit

exit

[node1] (local) [email protected] ~

$ date

Mon Oct 29 05:58:52 UTC 2018

[node1] (local) [email protected] ~
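The date stamps in the preceding output give a rough idea of how long each launch took. The following sketch works out the two intervals; the function name `elapsed_seconds` is hypothetical, and the figures are upper bounds, because each interval also includes the time spent typing exit and date.

```python
from datetime import datetime

# Matches the output of the date command in the session above,
# for example "Mon Oct 29 05:58:25 UTC 2018".
DATE_FORMAT = "%a %b %d %H:%M:%S UTC %Y"


def elapsed_seconds(start: str, end: str) -> int:
    """Return the whole number of seconds between two date stamps."""
    started = datetime.strptime(start, DATE_FORMAT)
    ended = datetime.strptime(end, DATE_FORMAT)
    return int((ended - started).total_seconds())


if __name__ == "__main__":
    first_run = elapsed_seconds(
        "Mon Oct 29 05:58:25 UTC 2018", "Mon Oct 29 05:58:41 UTC 2018"
    )
    second_run = elapsed_seconds(
        "Mon Oct 29 05:58:41 UTC 2018", "Mon Oct 29 05:58:52 UTC 2018"
    )
    print(first_run)   # 16 (includes pulling the image)
    print(second_run)  # 11 (image already available locally)
```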

An individual can rarely perform useful work alone; teams that communicate securely and well can generally accomplish more.

Just like people, containers need to talk to each other and they need help in organizing their work. This activity is called orchestration.

The current leading orchestration framework is Kubernetes (https://kubernetes.io/). Kubernetes was inspired by the Borg project at Google, which, by itself, was running millions of containers in production. Incidentally, the initial contribution of cgroups came from Google developers.

Kubernetes takes the declarative approach to orchestration; that is, you specify what you need and Kubernetes takes care of the rest.
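The declarative idea can be illustrated with a toy reconciliation function: given a desired state and the actual state, it works out the actions needed to close the gap. This is a deliberately simplified sketch and not how Kubernetes is implemented; the function name `reconcile`, the action tuples, and the nginx and redis image names are all hypothetical and chosen only for this example.

```python
from collections import Counter


def reconcile(desired: dict, running: list) -> list:
    """Return the actions needed to make `running` match `desired`.

    desired: image name -> number of replicas wanted
    running: image names of the containers currently running
    """
    actual = Counter(running)
    actions = []
    for image in sorted(set(desired) | set(actual)):
        diff = desired.get(image, 0) - actual.get(image, 0)
        if diff > 0:
            # Too few replicas: start the missing ones.
            actions.extend(("start", image) for _ in range(diff))
        elif diff < 0:
            # Too many replicas (or an unwanted image): stop the extras.
            actions.extend(("stop", image) for _ in range(-diff))
    return actions


if __name__ == "__main__":
    desired_state = {"ubuntu": 3, "nginx": 1}
    currently_running = ["ubuntu", "redis"]
    for action in reconcile(desired_state, currently_running):
        print(action)
    # ('start', 'nginx')
    # ('stop', 'redis')
    # ('start', 'ubuntu')
    # ('start', 'ubuntu')
```

The caller only states what should be running; it never says how to get there. Running the same function repeatedly against the latest actual state is the essence of the declarative approach.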

Underlying all this magic, Kubernetes still launches Docker containers, just as you did previously. The extra work involves details such as networking, attaching persistent storage, and handling container and host failures.

Remember, everything is a process!