A few days ago, Alibaba Cloud announced the release of Mars, its tensor-based framework for large-scale data computation. Mars tensor provides a familiar, NumPy-like interface. NumPy is a popular tool among Python users such as mathematicians and engineers, and among those working in core scientific computing. Mars can scale in to a single machine and scale out to a cluster with hundreds of machines.

Users can create and evaluate a Mars tensor with the following code:

    import mars.tensor as mt
    a = mt.random.rand(1000, 2000)
    (a + 1).sum(axis=1).execute()

According to a Medium post by Synced, “Mars can simply tile a large tensor into small chunks and describe the inner computation with a directed graph, enabling the running of parallel computation on a wide range of distributed environments, from a single machine to a cluster comprising thousands of machines.”
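The tiling idea in the quote can be illustrated with a small sketch. This is not Mars' implementation: it uses plain NumPy and the standard-library `graphlib` module (Python 3.9+), and the helper names `build_graph` and `run_graph` are hypothetical. It splits an array into row chunks, records the per-chunk steps of `(a + 1).sum(axis=1)` as nodes in a directed graph, and then runs the nodes in dependency order.

```python
import numpy as np
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def build_graph(a, chunk_rows):
    """Describe (a + 1).sum(axis=1) over row chunks as a directed graph.

    tasks maps a node name to a function of its dependencies' results;
    deps maps a node name to the list of nodes it depends on.
    (Hypothetical helper for illustration, not part of Mars.)
    """
    tasks, deps, sum_nodes = {}, {}, []
    for k, start in enumerate(range(0, a.shape[0], chunk_rows)):
        chunk = a[start:start + chunk_rows]
        # Source node: hands out one chunk of the large array.
        tasks[f"chunk{k}"] = lambda chunk=chunk: chunk
        deps[f"chunk{k}"] = []
        # Elementwise step on that chunk.
        tasks[f"add{k}"] = lambda x: x + 1
        deps[f"add{k}"] = [f"chunk{k}"]
        # Row-wise reduction on that chunk.
        tasks[f"sum{k}"] = lambda x: x.sum(axis=1)
        deps[f"sum{k}"] = [f"add{k}"]
        sum_nodes.append(f"sum{k}")
    # Final node: stitch the per-chunk results back together in order.
    tasks["concat"] = lambda *parts: np.concatenate(parts)
    deps["concat"] = sum_nodes
    return tasks, deps


def run_graph(tasks, deps):
    """Run every node after its dependencies and return the final result."""
    results = {}
    for node in TopologicalSorter(deps).static_order():
        args = [results[d] for d in deps[node]]
        results[node] = tasks[node](*args)
    return results["concat"]


rng = np.random.default_rng(0)
a = rng.random((1000, 2000))
tasks, deps = build_graph(a, chunk_rows=256)
result = run_graph(tasks, deps)

# The chunked result matches the single-shot NumPy computation.
assert np.allclose(result, (a + 1).sum(axis=1))
```

Here the nodes run one after another in a single process. Because the `chunk`, `add`, and `sum` nodes of different chunks do not depend on each other, a scheduler could in principle run them in parallel on separate workers, which is the property the quote refers to.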

Xuye Qin, Alibaba Cloud Senior Engineer, described Mars’ performance by stating, “Mars can complete the computation on a 2.25T-size matrix and a 2.25T-size matrix multiplication in two hours.”

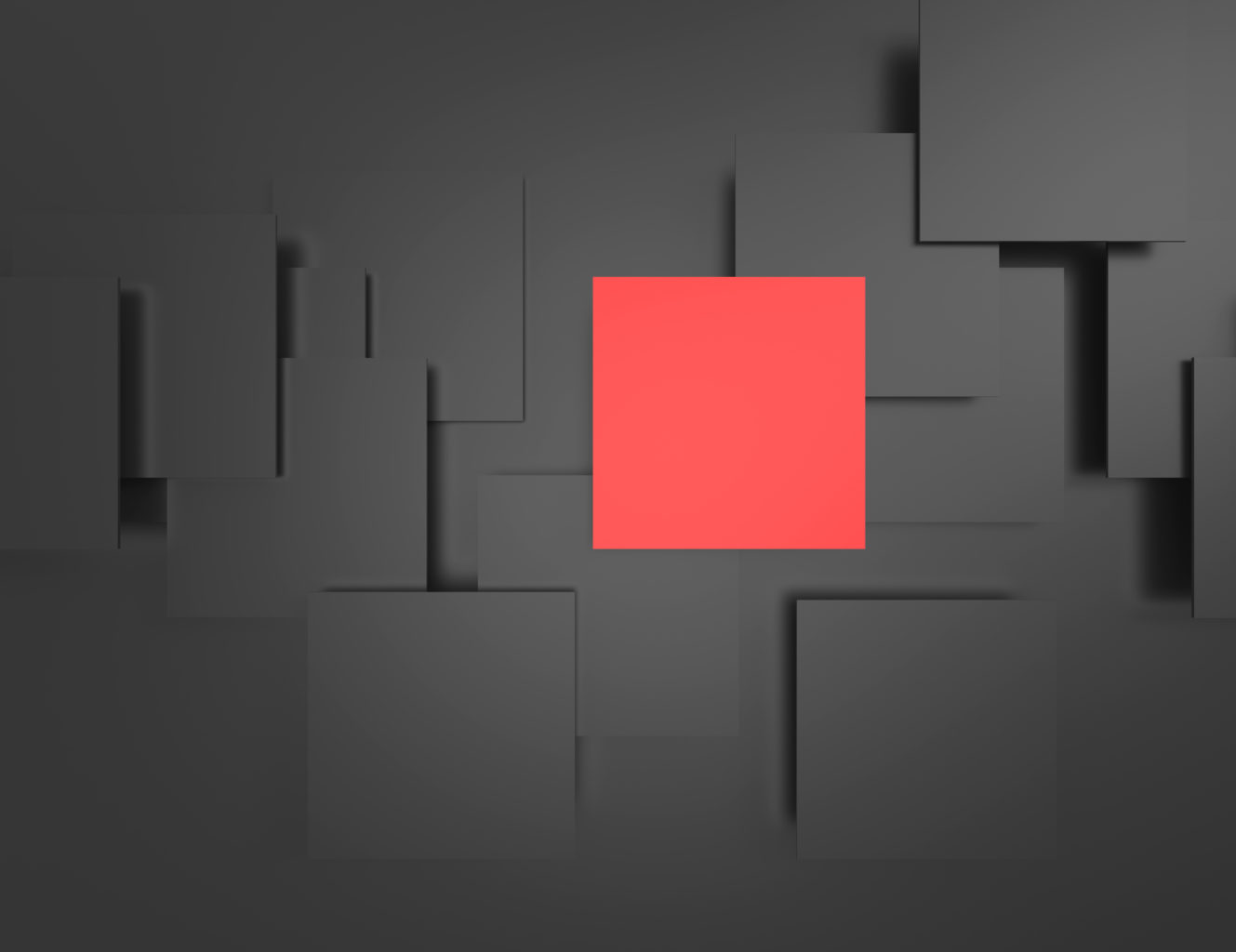

Unlike NumPy, Mars provides users with the ability to run matrix computation at a very large-scale. Alibaba developers carried out a simple experiment to test Mars’ performance. According to the graph below where NumPy (represented by a red cross at the upper left) lags far behind Mars tensors, which is successful in achieving ideal performance values.

Source: Medium

Mars supports a subset of NumPy interfaces, which include:

- Arithmetic and mathematics: +, -, *, /, exp, log, etc.
- Reduction along axes (sum, max, argmax, etc.).
- Most of the array creation routines (empty, ones_like, diag, etc.). Mars supports creating arrays/tensors on GPU as well as creating sparse tensors.
- Most of the array manipulation routines, such as reshape, rollaxis, concatenate, etc.
- Basic indexing (indexing by ints, slices, newaxes, and Ellipsis).
- Fancy indexing along a single axis with lists or NumPy arrays, e.g. x[[1, 4, 8], :5].
- Universal functions for elementwise operations.
- Linear algebra functions, including product (dot, matmul, etc.) and decomposition (cholesky, svd, etc.).
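For readers less familiar with these categories, the snippet below shows one call from each in plain NumPy. It is not Mars code: the list above says Mars exposes the same names, but whether each line runs unchanged under `mars.tensor` (followed by `.execute()`) is an assumption to check against the Mars documentation.

```python
import numpy as np

x = np.arange(50, dtype=float).reshape(10, 5)

# Arithmetic and elementwise math (universal functions)
y = np.exp(x / 50) + 1

# Reductions along an axis
row_sums = y.sum(axis=1)
col_argmax = x.argmax(axis=0)

# Array creation and manipulation
ones = np.ones_like(x)
stacked = np.concatenate([x, ones], axis=0)

# Basic indexing with slices and newaxis
first_cols = x[:, np.newaxis, :2]

# Fancy indexing along a single axis, as in the example from the list
picked = x[[1, 4, 8], :5]

# Linear algebra: product and decomposition
gram = np.dot(x.T, x)
u, s, vt = np.linalg.svd(x, full_matrices=False)
```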

To learn more about Mars, visit its official GitHub page.

