Researchers from Virginia Tech, Chen Gao, Yuliang Zou, and Jia-Bin Huang, recently published a paper titled ‘iCAN: Instance-Centric Attention Network for Human-Object Interaction Detection.’ In it, they propose an ‘instance-centric attention module’ for human-object interaction (HOI) detection. The network, iCAN, builds on person and object detections from a Faster R-CNN (region-based convolutional neural network) detector, and the attention module helps it identify and understand human-object interactions more effectively.

To understand the situation in a scene or an image, computers need to recognize how humans interact with surrounding objects. This is the task of human-object interaction detection, which localizes a person and an object and also identifies the relationship, or interaction, between them.

The core idea of this research is that the appearance of a person or an object contains informative cues about which parts of an image an algorithm should attend to, which should make predicting the interaction easier.

To exploit this cue, the researchers propose an instance-centric attention module that learns to dynamically highlight regions in an image conditioned on the appearance of each instance. The network can thus selectively aggregate features relevant for recognizing human-object interactions. The researchers validated the efficacy of the proposed network on the V-COCO (Verbs in COCO) and HICO-DET datasets and showed that this approach compares favorably with the state of the art.
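To make the idea of attention "conditioned on the appearance of each instance" more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the function name `instance_centric_attention`, the toy feature map, and the use of raw dot products in place of learned layers are all assumptions made for illustration. It scores every image location against one instance's feature vector, turns the scores into an attention map with a softmax, and aggregates the image features with those weights.

```python
import math


def instance_centric_attention(feature_map, instance_feature):
    """Return (attention_map, context_vector) for one instance.

    feature_map: H x W grid of C-dimensional feature vectors (nested lists).
    instance_feature: C-dimensional appearance vector of one person or object.
    Hypothetical helper for illustration; a real network would learn
    projections for both inputs instead of using raw dot products.
    """
    # Dot-product similarity between the instance and every image location.
    scores = [
        [sum(f * q for f, q in zip(cell, instance_feature)) for cell in row]
        for row in feature_map
    ]

    # Softmax over all locations, so the attention map sums to 1.
    peak = max(max(row) for row in scores)  # subtract max for numerical stability
    exps = [[math.exp(s - peak) for s in row] for row in scores]
    total = sum(sum(row) for row in exps)
    attention = [[e / total for e in row] for row in exps]

    # Attention-weighted sum of image features: the contextual feature.
    channels = len(instance_feature)
    context = [0.0] * channels
    for att_row, feat_row in zip(attention, feature_map):
        for weight, cell in zip(att_row, feat_row):
            for k in range(channels):
                context[k] += weight * cell[k]
    return attention, context


# Toy 2 x 2 feature map with 2 channels (made-up values).
feature_map = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.5, 0.5], [0.0, 0.0]],
]
person = [4.0, 0.0]  # made-up appearance vector for a person instance
obj = [0.0, 4.0]     # made-up appearance vector for an object instance

attn_person, ctx_person = instance_centric_attention(feature_map, person)
attn_obj, ctx_obj = instance_centric_attention(feature_map, obj)
```

With the same image features, the two instances produce different attention maps: the person vector puts most weight on the top-left location, while the object vector favors the top-right one. That per-instance behavior is what distinguishes this kind of attention from a single image-level attention map.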

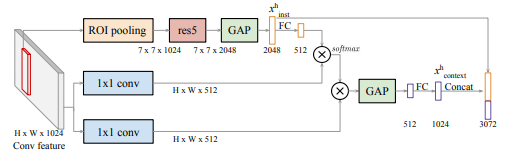

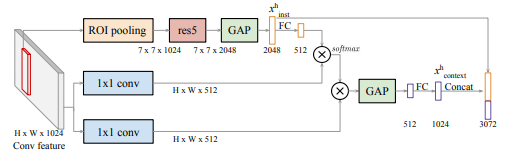

iCAN module

Highlights of the iCAN paper include:

- The researchers have introduced an instance-centric attention module that allows the network to dynamically highlight informative regions for improving HOI detection.

- They have also established a new state-of-the-art performance on two large-scale HOI benchmark datasets.

- They conducted a detailed ablation study and error analysis to identify the relative contributions of the individual components and quantify different types of errors.

- They also released the source code and pre-trained models to facilitate future research.

Advantages of the iCAN module

- Unlike hand-designed contextual features based on pose, the entire image, or secondary regions, iCAN’s attention map is automatically learned and jointly trained with the rest of the network to improve performance.

- Compared with attention modules designed for image-level classification, the instance-centric attention map provides greater flexibility, as it can attend to different regions in an image depending on the object instance.

To learn more about iCAN, head over to the research paper.

