Long short-term memory – LSTM 101

Long Short-Term Memory (LSTM) models are a special case of Recurrent Neural Networks (RNNs). A full, rigorous description of them is beyond the scope of this book; this section covers only the essentials.

Note

You can have a look at the following books published by Packt: https://www.packtpub.com/big-data-and-business-intelligence/neural-network-programming-tensorflow and https://www.packtpub.com/big-data-and-business-intelligence/neural-networks-r

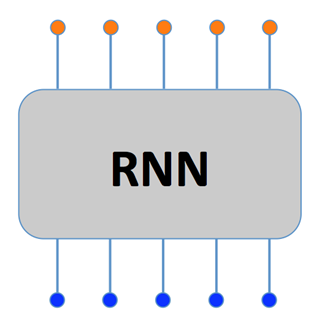

Simply put, RNNs work on sequences: they accept a multidimensional signal as input and produce a multidimensional signal as output. The figure shows an example of an RNN that can handle a time series of five time steps (one input for each time step). The inputs are at the bottom of the RNN and the outputs are at the top. Remember that each input and each output is an N-dimensional feature.
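To make the shapes concrete, here is a minimal NumPy sketch of a plain recurrent network (not an LSTM) processing a five-step sequence. It is an illustration only: the sizes `N`, `H`, and `M`, the random weights, and the function name `rnn_forward` are all assumptions made for this example and do not come from any library.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes (assumptions): 5 time steps, 3 input features,
# 4 hidden units, 2 output features.
T, N, H, M = 5, 3, 4, 2

# Randomly initialised weights; a real network would learn these.
W_xh = rng.normal(scale=0.5, size=(N, H))  # input  -> hidden
W_hh = rng.normal(scale=0.5, size=(H, H))  # hidden -> hidden (the recurrence)
W_hy = rng.normal(scale=0.5, size=(H, M))  # hidden -> output
b_h = np.zeros(H)
b_y = np.zeros(M)


def rnn_forward(inputs):
    """Run a plain RNN over a (T, N) sequence and return a (T, M) output."""
    h = np.zeros(H)  # hidden state carried from one time step to the next
    outputs = []
    for x_t in inputs:  # one N-dimensional input per time step
        h = np.tanh(x_t @ W_xh + h @ W_hh + b_h)
        outputs.append(h @ W_hy + b_y)  # one output per time step
    return np.stack(outputs)


x = rng.normal(size=(T, N))  # a time series of five N-dimensional inputs
y = rnn_forward(x)
print(x.shape, y.shape)
```

Each output depends on the current input and, through the hidden state `h`, on every earlier input, but never on later ones. The output size is chosen independently of the input size here; the two can also be equal.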

Inside, an RNN has multiple stages; each stage is connected to...