This article by Rich Helton, the author of Learning NServiceBus Sagas, delves into the details of NSB and its security.

In this article, we will cover the following:

- Introducing web security

- Cloud vendors

- Using .NET 4

- Adding NServiceBus

- Benefits of NSB


Introducing web security

According to the Top 10 list of 2013 by the Open Web Application Security Project (OWASP), found at https://www.owasp.org/index.php/Top10#OWASP_Top_10_for_2013, injection flaws remain at the top of the list of ways to penetrate a website. This is shown in the following screenshot:

An injection flaw is a means of gaining access to information or to the site itself by injecting data into input fields. It is normally used to bypass proper authentication and authorization, and the injected data is typically something the website never saw during testing or considered during development. For reference, I will draw on some slides found at http://www.slideshare.net/rhelton_1/cweb-sec-oct27-2010-final. One instance of an injection flaw is to put SQL commands into form fields, and even URL fields, to provoke SQL errors that return further information. If the error is not generic and a SQL exception occurs, the response will sometimes include table names. For example, the error might report that authorization was denied for sa on the password table in SQL Server 2008. That single message tells a person the SQL Server version, that the sa user is being used, and that a password table exists.
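The mechanism can be illustrated with a small, self-contained sketch. It is written in Python with an in-memory SQLite database rather than C# and SQL Server, purely so that it runs anywhere; the table layout, the function names `login_unsafe` and `handle_login`, and the sample inputs are all hypothetical. It shows two things from the paragraph above: input pasted into a SQL string can bypass a password check, and a non-generic error message leaks details to the person probing the site.

```python
import sqlite3

# Hypothetical demo database held entirely in memory.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
conn.execute("INSERT INTO users VALUES ('admin', 's3cret')")


def login_unsafe(name, password):
    """Vulnerable on purpose: user input is pasted straight into the SQL text."""
    query = (
        "SELECT COUNT(*) FROM users "
        "WHERE name = '" + name + "' AND password = '" + password + "'"
    )
    return conn.execute(query).fetchone()[0] > 0


def handle_login(name, password, verbose_errors):
    """Return a page message; verbose_errors mimics a site without generic errors."""
    try:
        return "ok" if login_unsafe(name, password) else "denied"
    except sqlite3.Error as exc:
        # A detailed database error hands the prober free information.
        return str(exc) if verbose_errors else "An error occurred."


# The injected OR clause makes the WHERE condition true for every row.
bypass = login_unsafe("admin", "' OR '1'='1")

# A lone quote breaks the statement; compare what each error policy reveals.
leaky = handle_login("admin", "'", verbose_errors=True)
generic = handle_login("admin", "'", verbose_errors=False)

print("bypassed:", bypass)
print("verbose error:", leaky)
print("generic error:", generic)
```

The verbose branch returns whatever text the database engine produced, which is exactly the kind of detail a generic error page is meant to hide.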

There are many tools and websites on the Internet where people can practice their web security testing skills without actually working in IT security as a professional or amateur. Many of these websites are well known and are listed at places such as https://www.owasp.org/index.php/Phoenix/Tools.

General disclaimer

I do not endorse or encourage others to practice on websites without written permission from the website owner.

Some of the live sites are as follows, and most are used to test web scanners:

There are many more sites and tools, and readers will have to research them on their own. Some tools look only for SQL injection. Gifted hacking professionals who spend their days looking for nothing but SQL injection would find these useful.

We will start with SQL injection, as it is one of the most popular ways to enter a website. But before we start an analysis report on a website hack, we will document the website. Our target site will be http://zero.webappsecurity.com/. We will start with the EC-Council's Certified Ethical Hacker program, where they divide footprinting and scanning into seven basic steps:

- Information gathering

- Determining the network range

- Identifying active machines

- Finding open ports and access points

- OS fingerprinting

- Fingerprinting services

- Mapping the network

We could also follow the OWASP Web Testing checklist, which includes:

- Information gathering

- Configuration testing

- Identity management testing

- Authentication testing

- Session management testing

- Data validation testing

- Error handling

- Cryptography

- Business logic testing

- Client-side testing

The idea is to gather as much information on the website as possible before launching an attack, as no information has been gathered so far. To gather information on the website, you don't actually have to scan it yourself at the start. Many scanners have already scanned the website before you begin. There are Google bots gathering search information about the site, the Netcraft search engine gathering statistics about the site, and many domain search engines with contact information. If another person has hacked the site, there are sites and blogs where hackers talk about hacking a specific site, including what tools they used. They may even post security scans on the Internet, which can be found by googling. There is even a site (https://archive.org/) called the WayBack Machine, which keeps previous versions of the websites it scans in its archive.

These are just some basic pieces, and any person who has studied for the Certified Ethical Hacker exam should have all of this at their fingertips. We will discuss some of the benefits that Microsoft and Particular.net have taken into consideration to assist those who develop solutions in C#.

We can search the WayBack Machine at http://web.archive.org/web/ for http://zero.webappsecurity.com/ to look for changes over time, and we will see something like this:

From this search engine, we can look at what the screens looked like in 2003 and walk through the various changes up to the present, 2014. Actually, there were errors in the archive's copy of the site from 2003, so the WayBack Machine directed us to the first good copy, from May 11, 2006, as shown in the following screenshot:

Looking with Netcraft, we can see that it was first started in 2004, last rebooted in 2014, and is running Ubuntu, as shown in this screenshot:

Next, we can try to see what Google tells us. There are many Google Hacking Databases that keep track of keywords in the Google Search Engine API. These keywords are expressions such as file:passwd to search for password files in Ubuntu, and many more. This is not a hacking book, and this site is well known, so we will just search for webappsecurity.com file:passwd. This gives me more information than needed. On the first item, I get a sample web scan report of the vulnerabilities available in the site from 2008, as shown in the following screenshot:

We can also see which links Google has already found by running a search for http://zero.webappsecurity.com/, as shown in this screenshot:

In these few steps, I have enough information to mount a targeted attack on the website and check whether these vulnerabilities are still active. I know the operating system of the website and have details of its history. This is before I have even considered running tools against the website.

To scan the website, for which permission is always needed ahead of time, there are multiple web scanners available. One list of web scanners is at http://sectools.org/tag/web-scanners/. One of the favorites, built by the famed Googler Michal Zalewski, is called Skipfish. Skipfish is an open source tool written in the C language, and it can be used in Windows by compiling it with Cygwin, which provides Linux-like libraries and tools for Windows. Skipfish has its own man pages at http://dev.man-online.org/man1/skipfish/, and it can be downloaded from https://code.google.com/p/skipfish/. Skipfish performs web crawling, fuzzing, and tests for many issues such as XSS and SQL injection. In Skipfish's case, its fuzzing uses dictionaries of paths, extensions, and keywords that hackers have learned from experience to be common attack vectors, and applies them to the website being scanned. For instance, it may not be apparent from the pages being scanned that an admin/index.html page is available, but the dictionary will check whether the page exists. Skipfish results will appear as follows:

The issue with Skipfish is that its fuzzer makes it noisy. Skipfish tries many scans and checks for links that might not exist, which takes time and generates a lot of traffic out of the box. There are many configuration options, including throttling of the scan to make the noise less conspicuous.
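To make the dictionary idea concrete, here is a minimal sketch of how a wordlist and a list of extensions expand into candidate paths. It is an illustration in Python, not Skipfish's implementation (Skipfish is written in C and its dictionary format is not reproduced here); the function name `candidate_urls`, the sample words, and the `example.test` host are hypothetical. The sketch only builds strings and sends no requests.

```python
from urllib.parse import urljoin


def candidate_urls(base_url, words, extensions):
    """Expand dictionary words into directory and file guesses under base_url."""
    urls = []
    for word in words:
        # Guess the word as a directory ...
        urls.append(urljoin(base_url, word + "/"))
        # ... and as a file with each known extension.
        for ext in extensions:
            urls.append(urljoin(base_url, word + "." + ext))
    return urls


# Hypothetical two-word dictionary and a placeholder host.
guesses = candidate_urls("http://example.test/", ["admin", "backup"], ["html", "bak"])
for url in guesses:
    print(url)
```

Each dictionary word multiplies into one guess per extension plus one directory guess, which is why a fuzzer with a large dictionary generates so many requests for links that may not exist.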

An associated scan in HP's WebInspect scanner will appear like this:

These are just automated means to inspect a website. These steps are common, and much of this material is known in web security. After an initial inspection of a website, a person may start making decisions on how to check their information further.

Manually checking websites

After taking an initial look at the website, an experienced web security person may move from automated checks to more manual ones. For instance, they might type Admin as both the user ID and the password, or Guest instead of Admin, with the list growing based on experience. They might then try the Admin and password combination, then Admin and password123, and so on. A person inspecting a website might have a lot of time for penetration testing and might try hundreds of scenarios. There are many tools and scripts to automate the process. As security analysts, we find many sites that give admin access just by using Admin and Admin as the user ID and password, respectively.

To enhance personal skills, there are many tutorials to walk through. One thing to do is to pull down a practice website that you can set up yourself, such as WebGoat, and go through the steps outlined in the tutorials from sites such as http://webappsecmovies.sourceforge.net/webgoat/. These tutorials show a person how to perform SQL injection testing through the WebGoat site. As part of these tutorials, there are Firefox plugins to test security scripts and HTML, debug pieces of the page, and tamper with the website through the browser, as shown in this screenshot:

Using .NET 4 can help

Every page that is deployed to the Internet (and in many cases, the Intranet as well), constantly gets probed and prodded by scans, viruses, and network noise. There are so many pokes, probes, and prods on networks these days that most of them are seen as noise.

By default, .NET 4 offers some out-of-the-box validation of web requests. Using .NET 4, you may discover that some input characters such as double quotes, single quotes, and even < are blocked in some form fields. You will get an error like the one shown in the following screenshots when trying to pass some of these values:

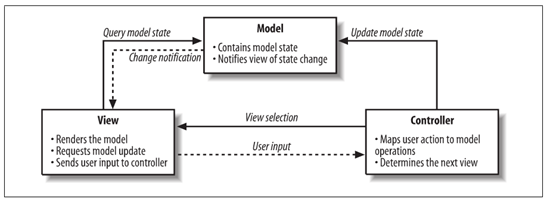

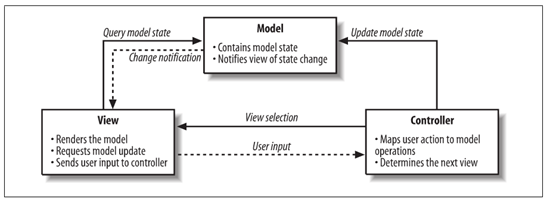
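The kind of check described above can be sketched as follows. This is a language-neutral illustration in Python, not the actual .NET 4 request validation rules, which are not reproduced here. The set of forbidden characters is taken only from the examples mentioned above, and the names `validate_form` and `DangerousRequestError` are hypothetical.

```python
# Assumed deny-list, based only on the characters named in the text above.
FORBIDDEN_CHARS = {"<", '"', "'"}


class DangerousRequestError(ValueError):
    """Raised when a form field contains a character on the deny-list."""


def validate_form(fields):
    """Reject the whole request if any field value contains a forbidden character."""
    for name, value in fields.items():
        for char in value:
            if char in FORBIDDEN_CHARS:
                raise DangerousRequestError(
                    "Potentially dangerous value in field %r: %r" % (name, char)
                )
    return fields


# A harmless request passes through unchanged.
print(validate_form({"user": "alice", "city": "Denver"}))

# A request carrying markup is rejected before any page code sees it.
try:
    validate_form({"comment": "<script>alert(1)</script>"})
except DangerousRequestError as exc:
    print("rejected:", exc)
```

Rejecting the request up front, before any application code runs, is the design point: the page never has to handle the suspicious value at all.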

This is very basic validation, and it resides in the .NET 4 framework's application pool in Internet Information Services (IIS) for Windows. To offer further security following Microsoft's best enterprise practices, we may also consider using Model-View-Controller (MVC) and Entity Framework (EF). For more information, we can review the Microsoft Application Architecture Guide at http://msdn.microsoft.com/en-us/library/ff650706.aspx. The MVC design pattern is one of the most commonly used patterns in software and is designed as follows:

This is a very common design pattern, so why is it important in security? What is helpful is that we can validate data requests and responses through the controllers, as well as provide data annotations on each data element for more validation. A common attack used by viruses over the years is the buffer overflow. A buffer overflow attack sends more data to a data element than it was designed to hold. Validation can check that the data stays within the expected size, which counteracts the buffer overflow.
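The idea of annotating each data element with its limits, and checking those limits before the data goes any further, can be sketched as follows. This is a Python illustration of the concept rather than the .NET data annotation attributes themselves; the `PaymentForm` model, its field names, the length limits, and the `validation_errors` helper are all hypothetical.

```python
from dataclasses import dataclass, field, fields


@dataclass
class PaymentForm:
    # The metadata plays the role of a per-field annotation (assumed limits).
    card_holder: str = field(metadata={"max_length": 50})
    memo: str = field(metadata={"max_length": 200})


def validation_errors(model):
    """Return one message per field whose value is longer than its declared limit."""
    errors = []
    for f in fields(model):
        limit = f.metadata.get("max_length")
        value = getattr(model, f.name)
        if limit is not None and len(value) > limit:
            errors.append("%s exceeds %d characters" % (f.name, limit))
    return errors


# A normal submission produces no errors.
print(validation_errors(PaymentForm("Ann Example", "Invoice 42")))

# An oversized value is caught before it reaches any backend code.
print(validation_errors(PaymentForm("A" * 5000, "Invoice 42")))
```

A controller would run a check like this on every incoming model and refuse the request if the list of errors is not empty.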

EF is a Microsoft framework that provides an object-relational mapper (ORM). Not only can it easily generate objects to and from SQL Server through Visual Studio, but it also lets the program work with objects instead of hand-written SQL scripts. Because queries are built from objects rather than from SQL text concatenated with user input, SQL injection, which is an attack involving injecting SQL commands through input fields, can largely be counteracted.
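The underlying protection is that user input travels as a bound parameter and never becomes part of the SQL text. The following sketch shows that principle with Python's standard `sqlite3` module and an in-memory database; it does not use EF or SQL Server, and the table layout and the `login_safe` function are hypothetical.

```python
import sqlite3

# Hypothetical demo database held entirely in memory.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
conn.execute("INSERT INTO users VALUES (?, ?)", ("admin", "s3cret"))


def login_safe(name, password):
    """Bind the input as parameters so it is treated as data, never as SQL."""
    row = conn.execute(
        "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?",
        (name, password),
    ).fetchone()
    return row[0] > 0


# The classic injection string is now just a wrong password.
print(login_safe("admin", "' OR '1'='1"))

# The real credentials still work.
print(login_safe("admin", "s3cret"))
```

The same injection string that would rewrite a concatenated query is compared literally against the stored password here, so the check fails as it should.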

Even though some of these techniques will help mitigate many attack vectors, the gateway to backend processes is usually the website. There are many more injection attack vectors. If stored procedures are used with SQL Server, a scan can be tried to access any stored procedures that the website may be calling, as well as any default stored procedures that may be lingering from default installations of SQL Server. So how do we add further validation and decouple the backend processes in an organization from the website?

NServiceBus to the rescue

NServiceBus is the most popular C# platform framework used to implement an Enterprise Service Bus (ESB) for service-oriented architecture (SOA). Basically, NSB hosts Windows services through its NServiceBus.Host.exe program, and interfaces these services through different message queuing components.

A C# MVC-EF program can call web services directly, and when the web service returns an error, the website receives that error directly in the MVC program. This couples the web service and the website: changes in the website can affect the web services, and actions in the web services can affect the website. Because of this coupling, websites may display a warning such as "Please do not refresh the page until the process is finished". Normally, it is wise to step away from the phone, tablet, or computer until the page has loaded. Even if you do not touch the website, another process running on the machine may. A virus scanner, an update, or any of the other processes running on the device could trigger a refresh. With all the scans that a website is subjected to from the Internet, it seems quite odd that a page would say "Please don't touch me, I am busy".

In order to decouple the website from the web services, a service needs to be deployed between the website and web service. It helps if that service has a lot of out-of-the-box security features as well, to help protect the interaction between the website and web service. For this reason, a product such as NServiceBus is most helpful, where others have already laid the groundwork to have advanced security features in services tested through the industry by their use. Being the most common C# ESB platform has its advantages, as developers and architects ensure the integrity of the framework well before a new design starts using it.
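The decoupling described above can be sketched with an in-process queue standing between a website function and a backend service function. This is a conceptual illustration in Python using the standard `queue` module; it is not NServiceBus code and does not reflect its API, and the names `website_submit`, `service_process_one`, `payment_queue`, and `status` are hypothetical.

```python
import queue

payment_queue = queue.Queue()  # stands in for the message queue between the tiers
status = {}                    # stands in for the status table the website reads


def website_submit(order_id, amount):
    """Website side: drop a message on the queue and return immediately."""
    payment_queue.put({"order_id": order_id, "amount": amount})
    status[order_id] = "pending"
    return "accepted"


def service_process_one(call_bank):
    """Service side: take one message and call the bank; False if nothing is queued."""
    try:
        message = payment_queue.get_nowait()
    except queue.Empty:
        return False
    try:
        call_bank(message)
        status[message["order_id"]] = "paid"
    except Exception:
        # The failure stays on the service side; the website only sees a status.
        status[message["order_id"]] = "failed"
    return True


def bank_is_down(message):
    raise ConnectionError("bank unreachable")


# The website call succeeds even though the bank is unreachable.
print(website_submit("demo-1", 25))
service_process_one(bank_is_down)
print(status["demo-1"])
```

Because the website never calls the bank directly, a web service error cannot surface in the page request, and refreshing the page only re-reads the status.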

Benefits of NSB

NSB provides many components needed for automation that are only found in ESBs. ESBs provide the following:

- Separation of duties: There is separation of duties from the frontend to the backend, allowing the frontend to fire a message to a service and continue its processing without worrying about the results until it needs an update. Workflow responsibilities are also separated by splitting them across NSB services. One service could be used to send payments to a bank, and another service could be used to report the current status of the payment to the MVC-EF database so that a user may see their payment status.

- Message durability: Messages are saved in queues between services so that in case services are stopped, they can start from the messages in the queues when they restart, and the messages will persist until told otherwise.

- Workflow retries: Messages, or endpoints, can be told to retry a number of times until they completely fail and send an error. Failed messages are automatically moved to an error queue. For instance, a web service message can be sent to a bank, and it can be set to retry the web service every 5 minutes for 20 minutes before giving up completely. This is useful during any network or server issues.

- Monitoring: NSB ServicePulse can keep a heartbeat on its services. Other monitoring can easily be done on the NSB queues to report on the number of messages.

- Encryption: Messages between services and endpoints can be easily encrypted.

- High availability: Multiple services or subscribers could be processing the same or similar messages from various services that are living on different servers. When one server or service goes down, others that are already running can take over its work.
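The retry behavior from the list above, retrying every 5 minutes for 20 minutes and then moving the message to an error queue, can be sketched as follows. This is a conceptual Python illustration, not NServiceBus's API or configuration; the function `deliver_with_retries`, its parameters, and the list used as an error queue are hypothetical. The `sleep` parameter is injectable so the example does not really wait.

```python
import time


def deliver_with_retries(message, handler, error_queue,
                         retry_interval_s=300, give_up_after_s=1200,
                         sleep=time.sleep):
    """Try the handler, retrying at a fixed interval; park the message on failure."""
    max_retries = give_up_after_s // retry_interval_s  # 20 min / 5 min = 4 retries
    attempts = 0
    while True:
        attempts += 1
        try:
            handler(message)
            return True
        except Exception as exc:
            if attempts > max_retries:
                # Out of retries: keep the message and the reason for later review.
                error_queue.append((message, repr(exc)))
                return False
            sleep(retry_interval_s)


def bank_is_down(message):
    raise ConnectionError("bank unreachable")


errors = []
waits = []  # record the requested delays instead of actually sleeping
delivered = deliver_with_retries({"payment": 42}, bank_is_down, errors,
                                 sleep=waits.append)
print(delivered, waits, errors)
```

With the defaults, the handler is tried once and then retried four times, five minutes apart, before the message lands in the error queue together with the last exception.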

Summary

If a website is on the Internet, it is being scanned by a multitude of means, by other websites and by other parties. It is wise to decouple external websites from backend processes through a means such as NServiceBus. Websites that are not decoupled from the backend can be affected by the external processes they depend on, such as a web service that validates a credit card. These websites may say "Do not refresh this page". Yet conditions beyond your control may refresh the page anyway and affect that interaction. The best solution is to decouple the website from these processes through NServiceBus.
