(For more resources related to this topic, see here.)

Getting ready

The first thing we need to do is to download the latest version of Sqoop from following location http://www.apache.org/dist/sqoop/ and extract it on your machine. Now I am calling the Sqoop installation dir as $SQOOP_HOME.

Given here are the prerequisites for Sqoop import process.

-

Installed and running Relational Database Management System (MySQL).

-

Installed and running Hadoop Cluster.

-

Set $HADOOP_HOME environment variable.

Following are the common arguments of import process.

|

Parameters

|

Description

|

|

--connect <jdbc-uri>

|

This command specifies the server or database to connect. It also specifies the port.

Example:

--connect jdbc:mysql://host:port/databaseName

|

|

--connection-manager <class-name>

|

Specify connection manager class name.

|

|

--driver <class-name>

|

Specify the fully qualified name of JDBC driver class.

|

|

--password <password>

|

Set authentication password required to connect to input source.

|

|

--username <username>

|

Set authentication username.

|

How to do it

Let’s see how to work with import process

First, we will start with import single RDBMS table into Hadoop.

Query1:

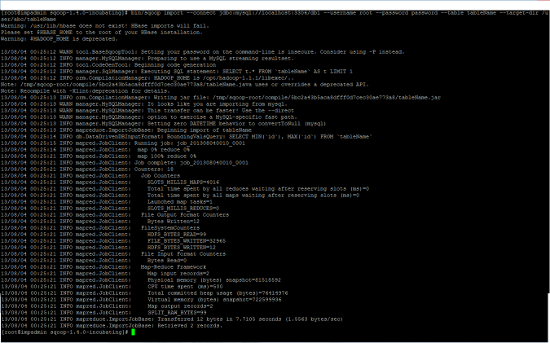

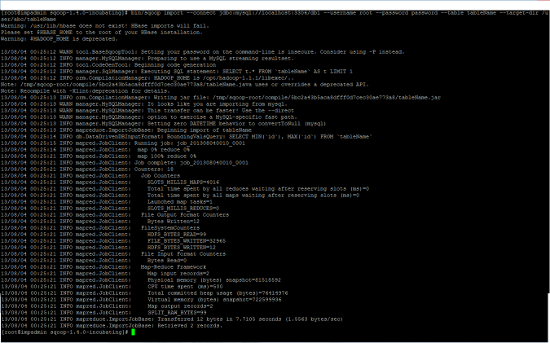

$ bin/sqoop import --connect jdbc:mysql://localhost:3306/db1

--username root --password password --table tableName

--target-dir /user/abc/tableName

The content of output file in HDFS will look like:

Next, we will put some light on approach of import only selected rows and selected columns of RDBMS table into Hadoop.

Query 2: Import selected columns

$ bin/sqoop import --connect jdbc:mysql://localhost:3306/db1

--username root --password password --table student --target-

dir /user/abc/student --columns “student_id,address,name”

Query 3: Import selected rows.

$ bin/sqoop import --connect jdbc:mysql://localhost:3306/db1

--username root --password password --table student --target-

dir /user/abc/student --where ‘student_id<100’

Query 4: Import selected columns of selected rows.

$ bin/sqoop import --connect jdbc:mysql://localhost:3306/db1

--username root --password password --table student --target-

dir /user/abc/student --columns “student_id,address,name”

-- where ‘student_id<100’

Unlock access to the largest independent learning library in Tech for FREE!

Get unlimited access to 7500+ expert-authored eBooks and video courses covering every tech area you can think of.

Renews at €14.99/month. Cancel anytime

How it works…

Now let’s see how the above steps work:

Import single table:

Apart from the common arguments of import process, as explained previously, this part covers some other arguments which are required to import a table into Hadoop Distributed File System.

|

Parameters

|

Description

|

|

--table <table-name>

|

Name of input table to fetch.

|

|

--target-dir<dir>

|

Location of output/target dir in HDFS.

|

|

--direct

|

If user want to use non-JDBC based access mechanism for faster database access

|

|

--options-file <file-path>

|

All the command line options that are common in most of commands can put in options file for convenience.

|

The Query1 will run a MapReduce job and import all the rows of given table to HDFS (where, /user/abc/tableName is the location of output files). The records imported in HDFS preserve their original columns order, which means, if input table contains four columns A, B, C and D, then content in HDFS file will look like:

A1, B1, C1, D1

A2, B2, C2, D2

Import selected columns:

By default, the import query will select all columns of input table for import, but we can select the subset of columns by specifying the comma separated list of columns in --columns argument.

The Query2 will only fetch three columns (student_id, address and name) of student table. If import query contains the --columns argument, then the order of column in output files are same as order specified in --columns argument. The output in HDFS will look like:

student_id, address, name

1, Delhi, XYZ

2, Mumbai, PQR

..........

If the input query contains the column in following order -- “address, name, student_id”, then the output in HDFS will look like.

address, name, student_id

Delhi, XYZ, 1

Mumbai, PQR, 2

.............

Import selected rows:

By default, all the rows of input table will be imported to HDFS, but we can control which rows need to be import by using a --where argument in the import statement.

The Query3 will import only those rows into HDFS which has value of “student_id” column greater than 100.

The Query4 use both --columns and --where arguments in one statement. For Query4, Sqoop will internally generates the query of the form “select student_id, address, name from student where student_id<100”.

There’s more...

This section covers some more examples of import process.

Import all tables:

So far we have imported a single table into HDFS, this section introduces an import-all-tables tool, by which we can import a set of tables from an RDBMS to HDFS. The import-all-tables tool creates a separate directory in HDFS for each RDBMS table. The following are the mandatory conditions for import-all-tables tool:

All tables must have a single primary key column. User must intend to import all the columns of each table. No --where, --columns and --query arguments are permitted.

Example:

Query 5:

$ bin/sqoop import-all-tables --connect jdbc:mysql://localhost:3306/db1

--username root --password password

This query will import all tables (tableName and tableName1) of database db1 into HDFS.

Output directories in HDFS look like:

Summary

We learned a lot in this article, about import single RDBMS table into HDFS, import selected columns and selected rows, and import set of RDBMS tables.

Resources for Article :

Further resources on this subject:

United States

United States

Great Britain

Great Britain

India

India

Germany

Germany

France

France

Canada

Canada

Russia

Russia

Spain

Spain

Brazil

Brazil

Australia

Australia

South Africa

South Africa

Thailand

Thailand

Ukraine

Ukraine

Switzerland

Switzerland

Slovakia

Slovakia

Luxembourg

Luxembourg

Hungary

Hungary

Romania

Romania

Denmark

Denmark

Ireland

Ireland

Estonia

Estonia

Belgium

Belgium

Italy

Italy

Finland

Finland

Cyprus

Cyprus

Lithuania

Lithuania

Latvia

Latvia

Malta

Malta

Netherlands

Netherlands

Portugal

Portugal

Slovenia

Slovenia

Sweden

Sweden

Argentina

Argentina

Colombia

Colombia

Ecuador

Ecuador

Indonesia

Indonesia

Mexico

Mexico

New Zealand

New Zealand

Norway

Norway

South Korea

South Korea

Taiwan

Taiwan

Turkey

Turkey

Czechia

Czechia

Austria

Austria

Greece

Greece

Isle of Man

Isle of Man

Bulgaria

Bulgaria

Japan

Japan

Philippines

Philippines

Poland

Poland

Singapore

Singapore

Egypt

Egypt

Chile

Chile

Malaysia

Malaysia